Want to know what it takes to be a pro at sound design?

To say that Frank Serafine might know what he's talking about is probably a huge understatement. Frank has been doing sound design since before many of you were born. He's seen technology change the way that things are done, not just in audio, but in film also.

In this epic chat, Frank and Joey geek out over how he developed sounds such as the Light Cycles in Tron, the massive spacecraft in the Star Trek movie, and many others... all without the benefit of Pro Tools or other modern bells and whistles.

He has a TON of great tips on how to become a sound designer, whether you just want to add sounds to make your animations stand out or maybe even do it as a pro. Take a listen.

Subscribe to our Podcast on iTunes or Stitcher!

Show Notes

ABOUT FRANK

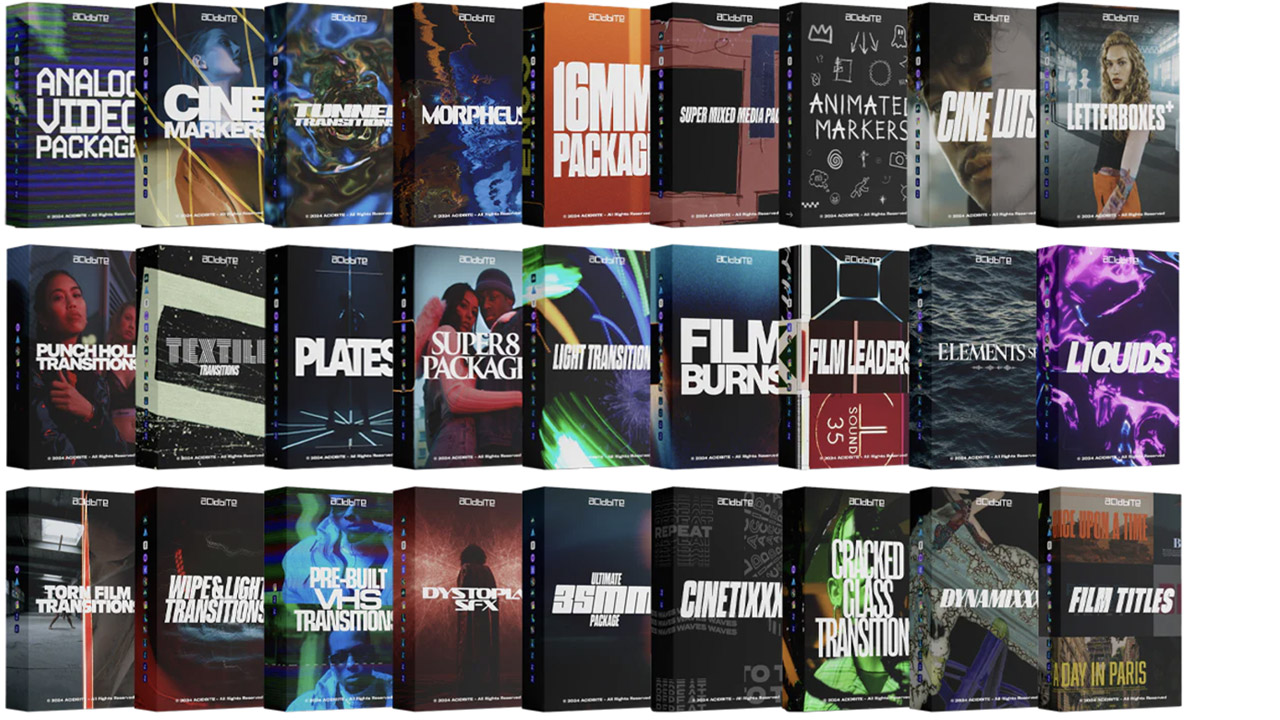

SOFTWARE AND PLUGINS

LEARNING RESOURCES

Pluralsight (Formerly Digital Tutors)

STUDIOS

HARDWARE

Zoom Audio

Episode Transcript

Joey: After you listen to this interview with Frank Serafine, sound designer extraordinaire, you're probably going to be very inspired and want to go off and try your hand at sound design and here's some cool news. From November 30th to December 11th of 2015, we are going to be sponsoring a contest in conjunction with soundsnap.com to let you try your hand at sound designing something really cool.

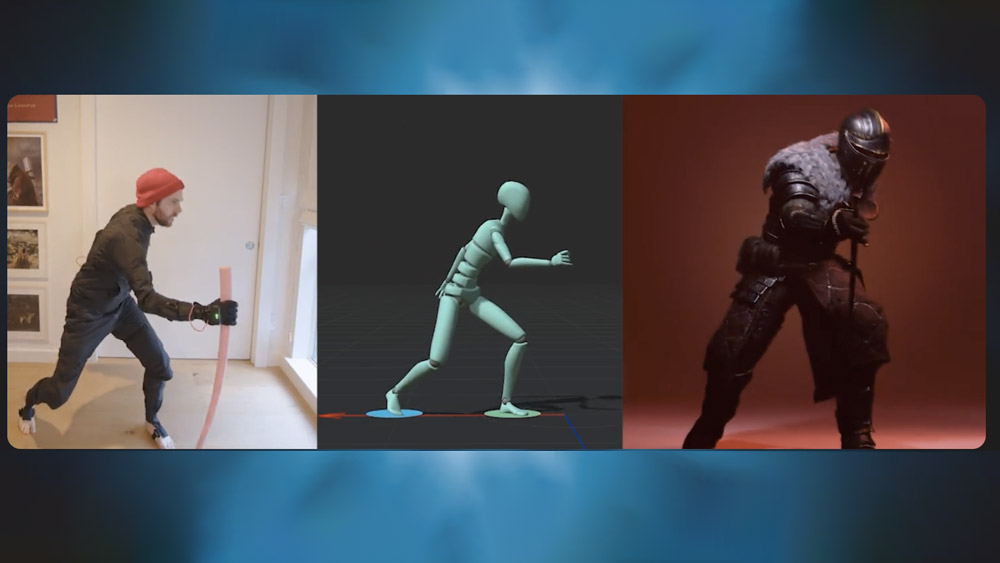

We commissioned Rich Nosworthy to create a very cool short clip. It's all this crazy, techie, 3D and there's no sound on it. What we're going to be giving everyone is that same clip and we're going to give everyone the same bucket of some sound effects from Soundsnap. You can actually download and use these sound effects wherever you want.

We also are going to encourage everyone to take some of the information here in this interview, some of the tricks and tips that Frank talks about and create some of your own sounds, create your own soundtrack to this clip and the winner, and there will be three winners chosen, those three winners will actually get a one-year subscription to Soundsnap to download infinity sound effects.

You can literally get on the website, download every sound effect they have. Then once your subscription is over, you're done and that's what you can win. It's pretty crazy. Stay tuned for more information about that at the end of the interview. If you're on our VIP subscriber list, which you can join for free, we will send out information about that as the date approaches.

This is going to be a short intro because I took a lot of time to say all that stuff. Frank Serafine is a sound designer. He's been doing it for decades. He sound designed the light cycles in the original "Tron". He's basically a part of my childhood, a part of the reason that I got into this industry in the first place. "Tron" was that movie for me that got me into visual effects that led into motion design and the sound was such a huge part of that.

Frank did all those sounds before there was Pro Tools, before there was soundsnap.com or MotionPulse from Video Copilot or any of that stuff. I asked him how he did it. We get deep into the woods. This is a very in-depth, geeky interview with an amazing, brilliant, creative guy who also owns a rooster that you may hear a few times in the background of the interview. I hope you enjoy it and stay tuned at the end for more information about the contest.

Frank, I want to say thank you very much for taking time out of your day. I know you're a busy guy and I'm really looking forward to digging into your brain a little bit.

Frank Serafine: Great. Let's go.

Joey: All right. First thing, I want to get your opinion on, Frank, because you've been doing sound for a long time. I have my own opinion but I'm curious, do you think that sound gets the respect that it deserves as opposed to the visual end of films and videos?

Frank Serafine: No.

Joey: That's an example of an interview question I shouldn't ask, one with a yes or no answer. Would you elaborate on that a little bit? What are your thoughts on that and why don't you think that sound gets the respect it deserves?

Frank Serafine: From the filmmaker perspective, I think everything maybe in this whole world comes down to money in a lot of ways, love and money. If the love is stronger than the money, you're going to make it better and you're going to put the money into it to make it right and it really kind of starts with the production, sound production. If you don't know what you're doing on a set while you're shooting, I mean, I know a lot of your users are visual effects guys and we'll get to that point.

For instance, if you're shooting a "Martian" movie or something like that, that's going to have a ton of visual effects behind it and mattes and this and that and the other thing, you have to really get the best production sound you possibly can get. This trickles down to even like the guy who's doing it on an iMovie. That's why sound doesn't get the respect that it needs. When it's good, it's so transparent that you don't realize it because it's good and it brings you in and it makes a movie. That's what makes movies. Audio, music, I mean, a lot of the visual effects people that I know they'll say, hey, take a look at the film without sound in it and what do you have? You have a spectacular 4K home movie that you can look at. Take the visual effect away and you have everything. You have the story, you have the sound, you have the music, you have the emotion, in your head you could watch the movie.

Joey: Right, right. That's really interesting. Do you think that for example like the new "Jurassic Park" movie comes out and everyone's talking about the dinosaurs and the effects and this and that and no one is talking about wow, the sounds of the dinosaurs are amazing. Do you think there's something like a little more subliminal about audio than visuals that makes that happen?

Frank Serafine: It gets right down to smoke and mirrors and movie magic, okay, that's all it is. They're doing it on the visual side, but we're doing it on the audio side. Our role is to support the visuals and be transparent, so that the minute that you start listening to the music or it pulls you out of it, then something is wrong. It's all about supporting. I just watched "Martian". Have you seen that movie yet?

Joey: I haven't seen it yet. I'm looking forward to it.

Frank Serafine: Oh my God. That is like the perfect movie. You know that most of it is fabricated fully and everything is fabricated in the whole movie but it sounds so damn realistic that you think they shot it on Mars.

Joey: Yeah, yeah. Let's get into how something like that is actually put together and "Martian" might be a good example. When I see the Oscars or something and someone wins the award for sound editing but then there's also sound design and then there's sound mixing and there's different titles and it's like this really big field. How do all of those things fit together and where do you fit in there?

Frank Serafine: God that is a loaded question. Okay. If you look at "Martian", the credits okay, this is some of Ridley Scott's greatest work. I'm estimating 4000 visual effects credits. Okay. This is what it takes to do the visual effects, 4000 people. Of course, we're the bastard child. We're audio. We're the last thing that ever gets dealt with. It's always the one that the producers are picking through the budget on because they went over on this and that and the other thing and sound is the last thing.

It's usually squeezed to the max and with the technology today with audio, like for instance, I just did a documentary on the life of Yogananda. It's got Steve Jobs. It's a pretty amazing documentary film on the first year where they came here from India. I did everything. I did all the dialogue editing on a laptop, a full documentary while the foley, all the sound effects, all the sound design I did all of it because I could. Over the years, I would have to have many editors involved.

Okay. Let's start from the top. You got your basic dialogue editor that usually hires an assistant who takes care of the ... I mean, there's a dialogue editor, the ADR editor, there's a foley editor, there's a sound effects editor which covers basically the tasks of what they ... there's kind of three levels of sound effects, there's hard effects, there's backgrounds and then there's what they called PFX which is called production effects, which you pull out of production. A lot of times, we rely on what's in production.

The editors are given their tasks and then of course there's the sound designer, which is what I am, which I started my career out as a sound designer. I'm really a composer, I'm a film composer and a musician, started out as a musician. That's how I started out my career. I got in as an electronic music keyboardist on "Star Trek", which was back in 1978, when I actually started working on "Star Trek". One of the ways I got into the industry is I could create sounds on a synthesizer that no dude had ever created before.

Joey: Let's take a step back from it. I want to talk a little bit about a sound editor because I worked as an editor actually before I was an animator so in my head I think I know what editor means. What does that job entail if you're a dialogue editor for example? Is it just taking the recorded dialogue, trimming out the silent parts, is it kind of it?

Frank Serafine: Yeah, yeah, yeah, yeah. That's great. I'm what's called a supervising sound editor slash designer. That's my credit on a film. The reason for that credit, I probably just call myself a sound designer but I am a supervisor because I head the whole ... I'm the one who gets the job, I'm the one who budgets the job, I'm the one who hires all the talent, I'm the one who books all the studios and I watch it from the point they start shooting all the way until I deliver the final product to the theater. That's my role as a supervising sound editor designer.

I bring everybody in, after the editor locks the prints on a huge movie like "Poltergeist" or "Star Trek" or "Hunt for Red October" any of those movies. Generally, because of when I did the original "Star Trek" film, "Star Trek I" back in '79 Jerry Goldsmith was the composer and I was just a young 24-year-old music guy that could create sounds on a synthesizer and I came in and I started collaborating Jerry Goldsmith scores with huge spaceship rumbles and lasers all over the place and it freaked him out because a lot of his music got dumped because we brought in a lot of effects.

When I did "Poltergeist", I insisted that Jerry Goldsmith come in on our spotting sessions from the very beginning so I like to bring everybody in including the composer, the editor, all of my team which consists of a dialogue editor, an ADR editor, two or three sound effects editors generally, that's pretty much the team of sound editors. They come in and spot. We look at everything. We basically determine from the top what everybody's going to do but we start with dialogue and we go through and we analyze all the issues.

The editor is a very vital component for me to have there because the editor knows the film better than anybody and can tell us exactly what any issues are. Generally, a film editor temps in sound effects before we even get around. He's pulling stuff out of libraries because the director wants to hear shit in the edit room. I usually supply things if there's any … especially if I'm doing a sequel or something, I end up giving a lot of effects that I did on the original film to the editor and we're usually there on a big film to help the temp get to the point where they're locked print because on a big film, we usually do several what they call temp dubs which are ... as the film is evolving, it might not be cut yet completely because it goes through a lot of processes where the studio wants to show it to what's called focus groups. Where they send it out to the public to scrutinize the film and oftentimes, it's very valuable to the filmmaker or the studio to get just like the layman's feedback on some of the storylines or why is this that or why did that guy scratch his butt over there, whatever. You know what I mean? Something that we don't see because when we're working on the film every day, a lot of continuity errors and things like that just run by us.

Joey: Right. Too close to it.

Frank Serafine: A lot of times, it's really fresh and you'll find a lot of films get edited in that last stage of finishing up. We have a lot of edits sometimes because they're taking things out, they're trimming things, they're adding things in and then we have to go through and conform all that material.

Joey: I get it now. Okay.

Frank Serafine: Then it gets pumped back to the editors. Then the dialogue editor, just starting with him, what he does is he takes all the dialogue, finds out what the funky parts are. I spot it for the dialogue editor generally because I'm more experienced. I've been around. I've been doing films for close to almost 40 years in Hollywood now. I've done hundreds of television episodes and I just like have x-ray vision when it comes to knowing whether or not to bring an actor in or not, if we can fix it. It used to be that a lot of this stuff we couldn't fix because the tools were not what we have today.

Joey: What are some of the things that you're looking for that might not be fixable?

Frank Serafine: Back in the day, we could never take mic bumps out. You couldn't EQ a mic bump. If somebody bumped the microphone in production, you were screwed. Or for instance, when we did "Lawnmower Man" we had a cricket in the warehouse that they couldn't exterminate, they couldn't find out how to get to it.

Joey: That's an expensive cricket then.

Frank Serafine: Yeah. No, I'm serious. That cricket cost the production. Let me tell you, in the end Pierce Brosnan was banging the walls, that's ADR, right, but when you watch that film, you don't have a clue that that is ADR. It sounds amazing. We couldn't have gotten that good of sound in that warehouse.

First of all, the problem was it was just a warehouse, it wasn't a sound stage that was totally soundproofed. It was very echoey. That's okay for some things but they would ... like for instance, they build the sets inside the warehouse and like Jeff Fahey was in a small little shed, a shack in the back of the church. Every time he would say something a little bit loud, it would sound like you were in a warehouse.

Now we have tools. There's a company called Zynaptiq, that ... it's a plug-in called D-Verb and what it does is it eliminates the reverb in the track and that's a big, big advancement for us.

Joey: That's huge. I honestly didn't even know that was possible. That's really cool.

Frank Serafine: Yeah. It's called Zynaptiq. It's Z-Y-N-A-P-T-I-Q.

Joey: Cool. Yeah, we're going to have show notes for this interview so any little tools like that, we'll link to it so people can check it out. Okay. I think I'm wrapping my head a little bit more around this. Obviously, there's a lot of just tedious manual labor in assembling and conforming. You, as the supervising sound editor and sound designer, are you also involved in then the mixing of these, I'm assuming, hundreds of tracks of audio?

Frank Serafine: Yes. I'm the supervisor and I have to sit on the stage with a mixer so he knows the show. The very first mixer that I encounter is my foley and ADR mixer because they're actually recording the foley and they're recording the ADR. I supervise those sessions because I need to direct the actor ... I mean, oftentimes, if I'm doing television I'll never see a director in the ADR room. I'll have somebody like Christopher Lloyd or Pam Anderson or somebody like that arrive to do "Baywatch", that's one where we did ADR on everything because it was shot on the beach in Los Angeles. Overall the dialogue, we had a very ... we probably had the best production recordist in the union working on that show. When you have like ocean in the background there's no way to take that ocean out.

Television is on kind of a heavy budget when it comes to the director especially, they don't pay them to come to an ADR session so I directed all those actors back then. You really need an experienced ADR supervisor when it comes to that type of work because if the actor is not enunciating or he's too far away or too close to the microphone, it comes time for you to try and match up the original production that may be another guy on the other side. The problem is in dialogue, like for instance, the dialogue editor, one of the tasks that he has when he goes in to do his dialogue editing is he needs to split every actor.

Splitting is going through basically the scene which is all recorded on one track unless you have lavaliers on each actor, right? The boom is basically picking up both actors and that's usually the best sound quality is the boom microphone. The dialogue editor goes through and he has to split out each actor and put them on their own separate channel so that we can bring ... It's like say if one actor's too loud then we can bring him down a little bit without affecting the whole track.

Joey: Got it. I'm assuming that nowadays it's all done in Pro Tools and probably pretty quickly but when you were getting started, how was that process done?

Frank Serafine: That's really great, great. I mean, I'm glad you brought that up because you probably know of a program called PluralEyes?

Joey: Yes.

Frank Serafine: Well back in the day like for instance Baywatch or any of the television shows I was working on or any of the films, everything was sent to us on DATs. Back then they were time-coded DATs that we would get and we put in the DAT player and then they had what was called an edit decision list, an EDL. We would go through that edit decision list and every scene that was in our lock print would go to that particular dialogue on that particular DAT and time code number and actually pump it into Pro Tools through an edit decision list. That's the way we've been doing it for the last 25, 30 years until PluralEyes.

Now it's just incredible because we don't have to do that anymore. We don't have to look at time codes. We don't have to record with time code but we still do as a backup but everything basically sees the waveform, tracks the waveform in production and snaps all the production, the high-quality DAT recorded or media-recorded field material and it basically lines up all our dialogue for us.

That's a huge advancement for us because that used to really be a huge time consuming and technical and not a lot of fun project.
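As an aside for readers curious how lining up by waveform can work at all: the general idea can be illustrated with cross-correlation, where you slide one recording along the other and keep the position where they agree best. The sketch below is only an illustration of that idea, not how PluralEyes itself is implemented; the function name `estimate_offset` and the synthetic test signal are assumptions made up for this example.

```python
import random

def estimate_offset(reference, clip):
    """Return the sample index in `reference` where `clip` lines up best.

    Brute-force cross-correlation: try every lag and score the overlap
    with a dot product. Fine for a demo, far too slow for real footage.
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(len(reference) - len(clip) + 1):
        window = reference[lag:lag + len(clip)]
        score = sum(r * c for r, c in zip(window, clip))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag

# Synthetic stand-ins (assumptions): a clean "field recorder" track, and a
# noisy "camera audio" excerpt of the same sound starting at sample 700.
random.seed(0)
field_recording = [random.gauss(0.0, 1.0) for _ in range(2000)]
true_offset = 700
camera_clip = [s + random.gauss(0.0, 0.5)
               for s in field_recording[true_offset:true_offset + 300]]

found = estimate_offset(field_recording, camera_clip)
print(found)
```

Even with noise added to the camera excerpt, the correct lag stands out because the waveform only matches itself strongly at one position, which is the "fingerprint" quality described in the interview.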

Joey: Yeah, you're bringing me back to film school. I mean this is originally how I learned it. I was at the very tail end of that. Just for anyone who's listening who doesn't know, PluralEyes is this amazing program. I use it all the time. It basically syncs audio tracks together that are not in sync and it figures out by voodoo and magic and maybe some, I don't know, the blood of a virgin or something and it syncs it all in like seconds. Yeah, I remember that used to be someone's job was to sync production audio with the dailies. I mean that could take someone a couple of days to do and now it's a button.

Frank Serafine: It's really science at its best and what it does is it looks at the camera audio, which is funky, usually. Then it goes to the sound guy's data clips and it locates them. It looks at the waveforms, it's very scientific. There's nothing more detailed than a waveform, an audio waveform. It's like a fingerprint that it just goes and it locates it and it embeds it right into the production timeline of whatever you have. These days I'm working in all three editors because you have to be really multi-abled.

Joey: Yeah, disciplinary.

Frank Serafine: Yeah and Final Cut X, Final Cut 7 which some people are still working in then you've got Avid and then you have Premiere. Premiere, most of the editors these days because they bailed when Apple released Final Cut X which is now … I mean, I'm a total believer of Final Cut X and where audio is going with them. I think they're probably the most advanced when it comes to audio but we're still in the embryonic stages as to how to deal with a clip-based system when it comes to mixing. We've been evolving with digital.

I was the first to use Pro Tools in a major motion picture back in 1991. Actually might have been a little bit earlier than '91.

Joey: What film was that?

Frank Serafine: It was Hunt for Red October which won the Oscar for best sound effects and for best sound editing, sound effects editing. I was the sound designer on that film and no one had ever used Pro Tools on a major motion picture before me but we hadn't cut dialogue on it. That was all still being done on 35-millimeter mag. The only thing that Pro Tools was used for was the sound design and then we would dump that to a 24-track and then that got mixed on the dub stage.

Joey: How were you using Pro Tools in your role as a sound designer on that?

Frank Serafine: Well, back then it was for the best sound that we could get because I don't know if you go back this far but 24-track, unless you had four or five of them and synchronizers and quarter-inch decks and all this stuff that ran off time code, it was kind of a nightmare, to tell you the truth. You know what I mean? To manage all this multitrack and how do you edit on multitrack.

Now, back when I did the sound design on Hunt for Red October, it's important to know that I did most of the sound design on Emulators. The Emulator 3 or 2; back then it was the Emulator 2.

Joey: Is that a synthesizer or is that …

Frank Serafine: Yeah, yeah, it's a sampler and that was at the age of samplers because there was no way and to this day, there is no way to manipulate the audio like we were able to do with the electronic musical instruments because we take a sample then we would actually perform it on the keyboard. Sometimes we need to lower it a little bit, maybe raise the pitch, lower it, raise the pitch. Speed it a little bit. If we raise the pitch higher on the keyboard, it would go faster. Sometimes we didn't want it to go fast so we take a pitch shifter and we'd lower the pitch so it sounded like the original but we get it perfectly in sync by squeezing it or stretching.

That's how we were able to get things in sync back then and then we would dump that to Pro Tools and then that would get mixed on the dub stage on Pro Tools and that was the first time we ever did that. Back then on Hunt for Red October the dialogue was cut on mag, the sound effects, they were created on an Emulator and then transferred to Pro Tools. Then that was actually transferred to mag back then and then all those tracks were in a 35-millimeter mag mixing stage.
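The sampler trick Frank describes, where playing a sample higher on the keyboard also makes it play faster, comes down to simple arithmetic. The sketch below assumes standard 12-tone equal temperament, where each semitone multiplies playback speed by the twelfth root of two; the function names are hypothetical and chosen just for this illustration.

```python
import math

def playback_rate(semitones):
    """Speed multiplier when a sample is played `semitones` above its root key."""
    return 2.0 ** (semitones / 12.0)

def resampled_duration(duration_s, semitones):
    """How long the sample lasts once it is played at the shifted pitch."""
    return duration_s / playback_rate(semitones)

def semitones_to_fit(duration_s, target_s):
    """Keyboard transposition needed to squeeze or stretch a sample to `target_s`."""
    return 12.0 * math.log2(duration_s / target_s)

# Example: a 3.0-second recording has to land in a 2.5-second gap in picture.
shift = semitones_to_fit(3.0, 2.5)
print(f"play it {shift:.2f} semitones up")
print(f"new length: {resampled_duration(3.0, shift):.2f} s")
# A separate pitch shifter would then lower the result by the same number of
# semitones so it sounds like the original while keeping the new length.
```

Playing the sample a full octave up (12 semitones) halves its length, which is why a second pitch-shifting step was needed whenever the timing had to change but the pitch did not.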

See no one would let us cut. It was a big deal back then because it was kind of … First of all, the unions didn't like that we were bringing digital into the industry because it was going to affect the skilled workers who've been in the industry for many years on 35-millimeter movie, all the workers. That came into play and also, Pro Tools wasn't quite there yet as far as cutting dialogue on Hunt for Red October so the next movie that came along that I did I ended up building a big studio in Venice Beach right there off Abbot Kinney. It was a 10,000 square-foot facility. I had a THX film mixing stage and nine studios.

We were at the head of the curve. I had Pro Tools. The director on Lawnmower Man was willing to let me do the whole production. He didn't care how I did it. He just gave me a budget and said, "Frank, you're the guy. Do it however you want." We actually did the R&D and we edited all the dialogue and all the sound effects and everything on that film on Pro Tools and that was the first film to actually implement Pro Tools from the lock print stage all the way to the final mix.

Joey: That's interesting. This is a good segue, I think. Let's dig in a little bit to the actual process of sound design. Most of the people who are on my site and my audience, they're animators and a lot of what they're animating it's not like a concrete literal thing like a bomb exploding or a horse galloping or something where there's an obvious place to start for sound design. It's a user interface like a button being pushed or like some window opening on a computer program or just some abstract looking thing.

Where I'd like to start I guess is asking you a very loaded question, why can't we as motion designers just go buy a big sound effects library and use that stuff? Why do we need sound designers?

Frank Serafine: You can and animators are hugely creative and a lot of the picture editors I know are sound editors and they come to me and they ask for sound effects, whether I can supply them, especially on lower budget projects. I actually encourage the editors now because like for instance in Adobe. Chances are you're going to be in Premiere anyhow and there's a program called Audition that is the sound component for Adobe. Well, it turns out that that's a very sophisticated sound editor and chances are if you're doing a project where you're not going to go to Universal to mix it which requires a big huge Pro Tools. I think they have something like 300 Pro Tools systems in their machine room with like consoles like 300 channel icon Pro Tools consoles.

That's what it takes to do a Martian movie or any of these big Atmos, Dolby Atmos theaters because Dolby Atmos has 64 speakers in the theater now. You just have to have 64 channels for the actual output alone. There's no Audition console available but I encourage editors to go in and start working with Audition because there's some very sophisticated tools that don't even exist in Pro Tools or Logic and vice versa. Like when I go to create sound effects, I don't do it on Pro Tools and I don't do it in Audition. I use Apple's Logic because I'm still using synthesizers. That's how I create sound effects, a lot of sound effects.

Actually, we'll probably get into this with your listeners, how to create special sound effects with the latest synthesizer plug-ins from Arturia.

Joey: Yeah, I'd love to talk about some of that stuff and I guess at what point do you think you've crossed this line where a sound effects library is no longer going to cut it and now you need someone like Frank Serafine to come in and do his black magic. What do you think the threshold is for that?

Frank Serafine: Well, first of all, if you're an animator, chances are you're not going to go out and spend 35,000 to get a professional sound effects library.

Joey: Probably not.

Frank Serafine: You know what I mean? You're not going to be in the business like we are because that's what we do. It's just like if you wanted to say, "Hey Frank. I know you're doing this incredible sound design work and we go to an animator. I want to animate my sound design." It's like are you kidding me? I don't know how to use that software. I don't know how to do this one.

Joey: Yeah, I think there's and this ties into what we talked about originally where sound doesn't get the respect that it deserves and maybe part of it is when the average person sees some amazing special effect on screen, they understand on some level how difficult it is to do that but when they hear something that was really beautifully recorded and engineered and mixed, they have no clue what it took to make that and there's no evidence on screen of how hard that was.

Frank Serafine: That's true because it's the most ... I loved watching The Martian the last couple of days because it's not only the sound designer but it's the director, how the director is creating his vision because that film, there's a lot of sections where there's no music at all. They just rely on the sound effects which is a new style.

On big films especially, you've got to have a sound designer. You can't do it on your own. You know what I mean?

Joey: Totally. How does the director interface with the sound designer? Because we haven't really gotten into it yet but if you're using synths and plugins and outboard gear and stuff like that to make these sounds, even Ridley Scott probably isn't that sophisticated in terms of all the sound gear that's out there, so how does the director put his vision into your head in a way where then you can make stuff?

Frank Serafine: Well, actually the director is the source of inspiration and the source of knowing his movie. Like a lot of times I don't know what the hell I'm doing when it comes to spotting. I'll look at the film and I don't see things that the director will. I just did this film called Voodoo and it's a little independent film, a horror film, and there were so many things because the film was so dark because it takes place in hell and in his head, there's rats running all over the place and scampering around and torture chambers down the hallway.

How would I even know that? I need to pick the director's brain because it's their film. It's the director's vision and all the ideas and inspirations, really, I mean a lot of it comes for me because I've worked with so many great directors that they've been my mentor because a lot of these guys know sound better than I do. Like Brett Leonard, the Lawnmower Man director, he was at the very beginning with holophonic audio. He did his own 3D audio for his first film back when he was young. He was basically a sound guy and Francis Coppola, for instance, was a boom operator before he became a director.

When you are an audio guy you realize how important audio is and that's why you look at Francis Coppola's films or George Lucas or any of these big moviemakers, because they understand how important audio is, that's why their movies are so incredible.

Joey: Is that a real rooster or is that a sound effect?

Frank Serafine: That's Johnny Junior, my rooster. He loves me.

Joey: That's awesome. I was trying to figure out like are you mixing something right now.

Frank Serafine: I put him in stuff all over the place.

Joey: Yeah, it'll be like the Wilhelm Scream just ends up in every phrase there.

Frank Serafine: There's a Zoom video that they did and it started at four in the morning and it overlooks the valley and everything here at my place. I just took this crow and the sun's coming up. He sounds incredible and I recorded it at the best quality with a shotgun microphone. That's another thing that we probably should talk about is the microphones that you need to select for recording out in the field is a very important process.

Joey: Definitely. Let's start getting into the nitty-gritty. Looking through your IMDb profile was intimidating. I grew up in the '80s and you have the original "Tron" which was a big movie in my childhood. It's funny because that movie broke a lot of ground visually but now having done some research I know that even in the audio realm, there was a lot of neat stuff going on there, probably at a time when you didn't have like all these software synths you have now and you had to do it all old school with gear.

I want to just hear your process. How did you come up with what the light cycle should sound like and then how did you know what synth you wanted to use? How does any of that start to come together?

Frank Serafine: Now it's experience for me because I've done so many films that I just … it's second nature. Back then, that was one of the first computer animated films. I really relied on both the tools back then, I mean, that was really primitive because that was the very beginning of Apple and Atari. That was the beginning of the computer revolution because it was computer animation and we were at the forefront of computer for audio.

However, there wasn't any computer control for audio back then, except for what we had. We had a synchronizer that locked in a very primitive three-quarter inch of UHF, they called it, video tape recorder which we had Jimmy rigged up to like what was called a CMX audio head, which we connect it to the video tape, which would read the second channel as this empty time code channel that was sent over to not even a 24-track, it was a 2-inch 16-track that we had synchronized and then, I have what was called a Fairlight, which was the very first, and it was an 8-bit, it wasn't even 16-bit back then. It was an 8-bit. The thing cost like 50 grand or something back then.

It was the first sampler because what I found, there's a lot of laws of physics when it comes to audio, especially with the light cycles, it's very difficult to simulate a Doppler with anything other than recording a Doppler.

Joey: Right.

Frank Serafine: On many of the scenes I performed all of those light cycles as though I were sitting on the actual motorcycle, and I performed them using my Prophet-5 synthesizer and I changed gears and manipulated all of the motor sounds with my pitch wheel.

Joey: That's amazing.

Frank Serafine: Okay. Then, I went out in the field with what back then was a Nagra, that's what they used to record analog production on the set, and I would strap a Nagra to these race car drivers out there. It's called the Rock Store, which is a big place where all the racers go, where the cops don't come and bother you and you can just come out in the middle of nowhere and just, like, romp through the hills.

Joey: Yeah.

Frank Serafine: We ended up taking out motorcycles and strapping the cyclist with the Nagra, the whole recording rig, and having them drive through the mountains with it. We did a bunch of Doppler recordings where we would stand in one location and have them speed past us at 130 miles an hour, that kind of stuff. Then, I took all of those elements and I put them into my Fairlight. Everything was logged. We would come back with all our quarter-inch tapes and back then, I was sponsored by Microsoft Word because that was the program where I could input …

Joey: That's perfect.

Frank Serafine: … all the information kind of like an Excel spreadsheet, and it was my first search tool. It was really the first search tool where I could put in like "motorcycle pass-by" and I would be able to tell what tape it was on. Then I'd go grab the quarter-inch tape out of my library, stick it up on my quarter-inch deck, push play, and sample it on to the Fairlight. Then I would actually perform it on the keyboard; once again, depending on how I squeeze it, I would play it up higher or lower in pitch and raise the speed of it.

Oftentimes, it didn't matter whether we lowered the pitch or raised the pitch. We wanted it to sound electronic anyhow. There was a certain aliasing in digital back then because it was only 8-bit. We kind of liked the sound of it because it would be kind of breaking up a little bit but it sounded digital and that's what we wanted for "Tron".

Joey: Now, that's so cool like that idea that you didn't just make the sounds and then point and click and then playback and see how it sounded. You're really watching the picture and performing the sound effects.

Frank Serafine: Yeah. All performing, opening filters because there was no computer control of it back then. If I wanted to go around the corner, I'd sit there and I go, and I turn like the contour knob and it would totally make a wild synthesizer pitchy weird thing and create this wild sound that it wasn't computerized or automated back then. Everything was live. I was performing like a musician within an orchestra.

Joey: Do you think that you need a musical background to be really effective at this kind of stuff?

Frank Serafine: God, dude, you hit it so right on because I think everybody I know, the greatest sound designers I know, have all come from a musical background. All of my favorites, like Ben Burtt who did Star Wars, I mean, all the guys that I've mentored, Elmo Weber, they've all been composers and they understand musicians and they understand the emotion. First of all, they have the emotional and inspirational element that it takes to create sound design because you really … sound design is really orchestration using sounds.

You're actually creating to the picture; that's a little different with music. With music, sometimes you don't want to be on the notes. You don't want to hit it. You want to be a little laggy or you want to hit past the picture cut. There's a lot of things that you do in music where, if you were just sitting there playing right to the picture, it would become like a Bugs Bunny cartoon. That's why music is so subjective and just creates an atmosphere for mood, and then sound design actually comes in and creates the believability of what's going on with the picture.

Joey: Are there any correlations that you found with musical theory and what you do with sound design, like a good example would be if you need something to feel ominous, right? In music, you might have like dissonant notes playing or some deeper notes. Then, in sound design, would you think in the same level like well a sound effect with more low-end or something really low-end, ultralow frequency stuff, is that really going to feel ominous the way music would? Does any of that correlate?

Frank Serafine: Yeah. It does. Like a composer, like Mozart and some of the great composers. I work with this composer, Stephane Deriau-Reine and these guys are so schooled that they never sit at a keyboard, they write it down on paper out of their head.

Joey: That's crazy.

Frank Serafine: You know what I mean, like Mozart wrote and Bach, all those guys, they never sat at a keyboard and wrote their compositions out. They wrote it out on paper first and then they'd sit at the keyboard and play it. That's kind of how sound design is. You hear it in your head, you spot it on a piece of paper and you write all the elements that you think it's going to take to make that sound. Then, you go through your libraries and you start picking what you need. Oftentimes, I can't find anything in libraries. That's what I do, I'm a library provider, I'm one of the top independent sound effects library companies out there.

Mainly, I go out and record my own stuff because I look in a library and I find out where all the holes are because I have everybody's library. I have every sound effects library on the planet. I usually go, I like to do when I come on to a film, I start spotting through and I start cherry picking through the library. Most of the time, I end up with everything out of my libraries. My library is popped up with the best stuff generally because I'm a sound editor so when I go out to record sounds I know exactly what a sound editor wants to hear.

Joey: Right.

Frank Serafine: A lot of these guys that go out and record sound effects, they're not sound editors. They're somebody from Buffalo in New York that's making a sound effects library and they recorded a snow plough and this is what it is.

Joey: That's what I think of when I hear sound effects library, I think of rain falling on concrete and then, rain falling on snow and then, leather shoe on wooden floor, that kind of stuff.

Frank Serafine: That's all fine but a lot of those guys, they don't understand that when they go out to record like for instance in Urban Sound, like what you're looking for in an Urban Sound, one of the most popular sound I have is three-block away dog. You got the crickets, because that three-block away dog is the hardest thing to create because you got to go get a dog out of the library and you got to make him sound like he's three blocks away, which doesn't work very well because that's very complicated algorithm. To create a dog echoing three blocks away is, that dog is bouncing off this side of the building, he's sitting in the tree, he's bouncing off the church. It creates a very unique like I said, convolution reverb is what it is technically.

So, when I go out to record I'm listening because I know exactly what a sound editor is going to want to hear, so I go out and I fill the holes. On a film, 99% of the effects on every film that I work on is generally stuff I go out and rerecord, only because I want it in my library. I'll go to a library and I go, oh, man, that mockingbird is incredible, I'll never get anything better than that, because where am I going to even find a mockingbird? I'll end up probably using those mockingbirds if that's what I have to specifically get.

I'll get the best one out in the library. Chances are, anything else, any backgrounds, anything at all, I go out because first of all, the technology is changing, every year there's better sounding stuff, better sounding recording gear. I just acquired the new Zoom F8. It's eight channels of portable 192 kilohertz, 24-bit resolution audio quality. Back in the day, it would cost you 10 grand, now it's $1,000.

Joey: It's like the Zoom, because I have the H4n, Zoom H4n, is that kind of like the big brother?

Frank Serafine: I would say it's his godfather.

Joey: Yeah.

Frank Serafine: It's not even a brother, it's a godfather.

Joey: Yeah.

Frank Serafine: It's eight channels, battery powered. The highest resolution you can find out there, and it has SMPTE time code, so I've been locking two of them together so when I go out into the field, I have 16 channels of location microphones.

Joey: Does that let you, like, if you need the ambient sound of an environment, you can actually go out and place 16 microphones and capture that? Is that kind of how you'd use that?

Frank Serafine: That's exactly what I'm doing because now with Dolby Atmos, you have 64 speakers that you have to fill, right? What I do is I go out with what's called the Holophone. It's an eight channel microphone that simulates the human skull. It's got eight mics in it. That's for one of them and that simulates what we hear as a human in the upper spheres, anything bouncing in the upper area of the atmosphere that we hear which is probably 50% of our hearing is above our head and that's never existed in a theater until Atmos.

Then, on the other one, I'm using super high quality microphones. I'm using these DPA microphones that record way beyond the human range, frequencies that only bats could hear, or rats. Super high frequencies, and you ask me, why would we ever want to record at that level? Remember when I was talking about slowing things down and speeding things up?

Joey: Sure.

Frank Serafine: Okay. The resolution of 192 kilohertz, the reason why we like to record sound effects at that resolution is the same sort of principle that we have with 4K or any of these video formats. It's almost like pixels, in that the higher the resolution you have, when you go to manipulate the audio and you lower it down two octaves, like say for instance my rooster there, say I record him at 192 and bring him down two octaves, he will sound like a dinosaur from Jurassic World.

Joey: Right.

Frank Serafine: He'll rumble the whole place and there won't be any digital degradation or aliasing in the audio signal.

Joey: That's a really good analogy you made. It's like the 4K thing for sure but even more it's almost like the dynamic range. It used to be that you have to shoot on film if you wanted to really push the color correction because otherwise, with video, you start to get pixels and it breaks up and I can totally see what you're saying if you have more samples with audio, you can totally manipulate it and you won't get that like choppy digital grating sound. That's awesome.

Frank Serafine: Right. That's really the secret because a lot of people are like, oh, man, you're recording at 192, you can't hear that, only bats can hear that. It's like, yeah, only bats can hear it, which is cool, but wait until I turn it down three octaves or five octaves.

You're going to go, wow. That sounds so realistic and this is like … or you bring it up, the same thing happens when you bring it up in pitch.
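The arithmetic behind that point can be sketched in a few lines of Python. This is only an illustration, not anything from Frank's workflow: it assumes pitch is lowered by simply slowing playback (so every frequency is divided by two per octave), and the function names are made up for the example.

```python
def nyquist_hz(sample_rate_hz):
    """Highest frequency a recording at this sample rate can contain."""
    return sample_rate_hz / 2.0


def shifted_frequency_hz(frequency_hz, octaves):
    """Frequency after shifting by `octaves` (negative means lower pitch)."""
    return frequency_hz * (2.0 ** octaves)


def highest_content_after_shift(sample_rate_hz, octaves):
    """Top of the recorded band once the whole recording is pitch-shifted."""
    return shifted_frequency_hz(nyquist_hz(sample_rate_hz), octaves)


if __name__ == "__main__":
    for rate in (48_000, 192_000):
        top = highest_content_after_shift(rate, -2)
        print(f"{rate} Hz recording, down 2 octaves: content up to {top:.0f} Hz")
```

Under those assumptions, a 48 kHz recording dropped two octaves has nothing left above 6 kHz, while a 192 kHz recording still reaches 24 kHz, provided the microphone actually captured that ultrasonic content in the first place.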

Joey: Yeah.

Frank Serafine: I've been doing this for years. I used to do it back when I had to manipulate like those motorcycles in "Tron", I had to do it on a keyboard. That was always the problem because once you start like manipulating the pitch on especially, it starts breaking up but we're beyond that age now. You're going to hear sound effects in the next 10 years that I mean, sound is evolving just so quickly now.

Joey: Let's dig into, a little bit, the whole synthesizer and completely fabricated sounds where you're not using a microphone at all, you're just making them … and I assume now it's mostly in the computer, what is that process like? Why don't you start with what it was like? Now, what it's like, what's the future of that?

Frank Serafine: First of all, synthesizers for me, it's probably the biggest inspiration in music. When watching "Martian" for instance, a lot of those big movies, they never bring synthesizer music in. It's all like big super orchestral and that's about it.

Joey: Yeah.

Frank Serafine: I love "Martian" for the music score because it's a full, heavy-duty, Jerry Goldsmith, John Williams styled orchestral score but then it's like Daft Punk, like super high-end electronic elements in the music, which I love because that's the future of man. I think electronic music is like we're going to … God, it thrills me to see where it's going. Everything I listen to on the radio, even the pop music, it's like stuff that used to be really kind of difficult to create back in the day. When I did "Star Trek", I was a little guy, I was just this young kid that owned a Prophet-5. I didn't even own the Prophet-5 back then. I was in my early 20's and I had to go beg my family because I was doing "Star Trek". You got to give me a loan. I got to get a Prophet-5. I just had a Minimoog. I'm doing this big movie out here in Hollywood, dad, come on, can't you break loose with a little dough.

He actually loaned me the money. It was five grand and I bought a Prophet-5 for "Star Trek". I had a Minimoog and I had a Prophet-5 and that's all I had. I had to make do with those tools and boy I learned how to manipulate those synthesizers to the max. To this day, that's kind of where I go. I go right to my Minimoog. Anyhow, I used to own 55 synthesizers by the time I did "Star Trek" and "Tron", through the '90s, I had rooms filled with synthesizers.

Joey: Wow.

Frank Serafine: I mean, 55 synthesizers are not that much because one of my friend's name is Michael Boddicker. He did all the Michael Jackson records and everything. He owns 2,500 synthesizers.

Joey: What's the main difference, like what differentiates a synthesizer and forgive me because I've never actually used one so, what are you looking for in a synthesizer that differentiates the Prophet-5 from one that you didn't want?

Frank Serafine: Back then, my Minimoog was so spectacular that when the Prophet-5 came out, it wasn't even fully polyphonic, it was five notes, that's why they call it Prophet-5.

Joey: Yup.

Frank Serafine: It had five oscillators, unlike my Minimoog, which only had one. I could only play one note at a time. It gave me more sounds and it gave me more flexibility. To tell you the truth, these were the only tools you could really afford too. Back then, there was the modular Moog but that was, I think, $30,000 or $40,000 for that synthesizer.

Joey: Wow!

Frank Serafine: Only Herbie Hancock or like Electric Light Orchestra or who was the other, Emerson, Lake & Palmer, all those major guys had them but those were big touring groups that were pulling down big bucks that could afford big synthesizers like that.

Joey: Right.

Frank Serafine: I got in with the Prophet. I could create sounds that no man had ever created before and I broke into the Hollywood sound business as a kid. Today, it would be a lot rougher because I was able to kind of sneak on to the Paramount lot, this was way before 9/11. Now, you cannot get on the Paramount lot without them scanning your whole body.

Joey: Yeah.

Frank Serafine: Back then, I was able to kind of sneak on to the lot, go over and meet all the sound editors. I brought my cassette player with me. I'd play all the synthesizer sounds for them and they were like, wow, cool.

Joey: Yeah.

Frank Serafine: I got in back when I was pioneering all this stuff. Nobody even had these synthesizers but since I was kind of a session player around L.A., that's kind of how … my first job in L.A. was I performed live at Space Mountain at Disneyland.

Joey: Cool. That's perfect.

Frank Serafine: Since I served my entertainment country, I was like …

Joey: Yeah.

Frank Serafine: I almost feel like I was in the military for that one. Then I started working at the studios up at Disney and then worked on "The Black Hole", and then Paramount heard about me creating these weird black hole sounds and they ended up hiring me on "Star Trek".

That's kind of how I got into the business. Now, everybody has got a synthesizer, and kind of getting back to that, I'm working with Arturia. That company acquired all the rights to every synthesizer ever made back in the '70s, '80s and '90s and they actually worked very closely with Robert Moog, who developed a lot of the early synthesizers. He came in on, for instance, the Minimoog. One of the reasons why I kind of let go, and I'm kind of sorry I did let my Minimoog go, is because they're worth like between $6,000 and $8,000 now. When I bought mine, I was like 19 years old. I think I got it for $500 or something.

Joey: Yeah.

Frank Serafine: Now, they're six or eight. Once I saw the digital revolution and Arturia come along, developing the software plug-ins that emulate all these synthesizers, I immediately just sold all my synthesizers and I just got into doing it all virtually. The problem with that over the years was that, okay, here's the Minimoog but how do I turn the knob on it with a mouse?

Joey: Right.

Frank Serafine: Back in the day, with five fingers when I was doing "Star Trek" and "Tron", those five fingers, I would tape down a note on the Prophet and I'd sit there and I'd manipulate the contour and the frequency and the attack and the decay. On my left hand, I'd be modulating and I'd be doing all … my hands were turning all the knobs all the time.

Joey: Right. It gets back to that performing thing. You were not just creating sounds and then editing them in, you were actually making them in real time.

Frank Serafine: Yeah. Making them in real time and creating something with everything that was at my finger tips as to say.

Joey: Was there other gear too or was it just the synthesizer? Because I've been in a lot of studios, I've seen rooms filled with outboard gear and a lot of guys swear you have to have this compressor and this preamp and this and that. Was there any of that going on with the "Tron" stuff or was it pretty much, here's the sounds coming right out of the Prophet-5?

Frank Serafine: Oh, no, no. I had racks and racks of outboard gear. Harmonizers, flangers, delays, y Expressers, pitch to voltage converters.

Joey: It's like a dark art understanding all that stuff.

Frank Serafine: Yeah. It was kind of cool, there'll never be sounds like for instance in "Tron", as they de-res.

Joey: Yup.

Frank Serafine: You know that sound? All of this is like an experimental process, playing around with this stuff because no one's ever done it before. That sound was a really interesting one because I took a microphone and ran it through the pitch-to-voltage converter. Back then, it was a Roland pitch-to-voltage converter, right? Because we didn't even have MIDI back then, this was pre-MIDI.

The pitch-to-voltage converter went into my Minimoog, right, and then I would watch the picture and I took a microphone and I fed back like Jimi Hendrix through the PA speakers and through the studio speakers, and the feedback controlled the pitch-to-voltage converter, which made the sound on the Minimoog. I actually rattled the microphone in my hand as I watched the picture and fed it back through the speakers. It was actually feedback that was manipulating the oscillators in the synthesizer.

Joey: It sounds very much like you're a mad scientist, like you're just throwing stuff at the wall, you're just trying this into this, into this. Does it still work that way today?

Frank Serafine: No, not at all. Not at all.

Joey: It's kind of sad.

Frank Serafine: It's so sterile.

Joey: That's sad. I want to know how would the average sound designer, someone who's in their early 20's and they've never used anything but Pro Tools and digital. What is the current process for creating stuff like that look like?

Frank Serafine: Okay. When it comes to synthesizers, it's an unbelievable time for you to go out and just, now, it's like, okay, you can go ahead and buy these Arturia plug-ins. It used to be, through Arturia, they were between like $300 and $600 a plug-in, okay, for each synthesizer: a Minimoog, a Prophet-5, the CS-80, the Matrix, the ARP 2600, the modular Moog, I mean, it goes on and on and on. I think you get like 20 synthesizers. Now it's the whole bundle for $300.

Joey: Wow.

Frank Serafine: You probably have like $150,000 worth of synthesizer power, which I would have had to pay back in the day to own the hardware for that stuff. Now for $300, you have it at your fingertips.

Joey: Does it sound as good as the real thing?

Frank Serafine: It sounds better because back in the day, and one of the reasons why I sold my Minimoog was that it had hiss, it had crackles, it was transistors and capacitors and the thing would not stay in tune. It was beautiful but it was just imperfect. When I bought the first Prophet-5 out there, it was called the Prophet-5 Rev 2, the thing would not stay in tune. It was beautiful. Everybody loved the Rev 2 because the oscillators were so warm and gorgeous but you could never keep it in tune.

There were problems with those synthesizers. What I loved about the resurrection of all of these metal hardware tools into the software environment was that, for instance, Robert Moog, who developed the Minimoog and the modular Moog, I mean, just a bunch … he was the pioneer and godfather of electronic music. He came back as they were developing, before he died. He worked with Arturia and he fixed many of the problems that he could not fix in his synthesizers with analog circuitry. He was able to actually go in when they were writing the code for the software and actually fix a lot of the problems in the Minimoog for the virtual synth.

Joey: Wow!

Frank Serafine: When you play really low bass sounds, the capacitors couldn't, like, handle it back then. Now the bass is like, whoa.

Joey: Yeah. It's perfect.

Frank Serafine: Yeah. It's perfect, really.

Joey: Do you get any backlash because I mean, I know in the music recording world, there's a big divide still between analog and digital, is that happening in sound design as well?

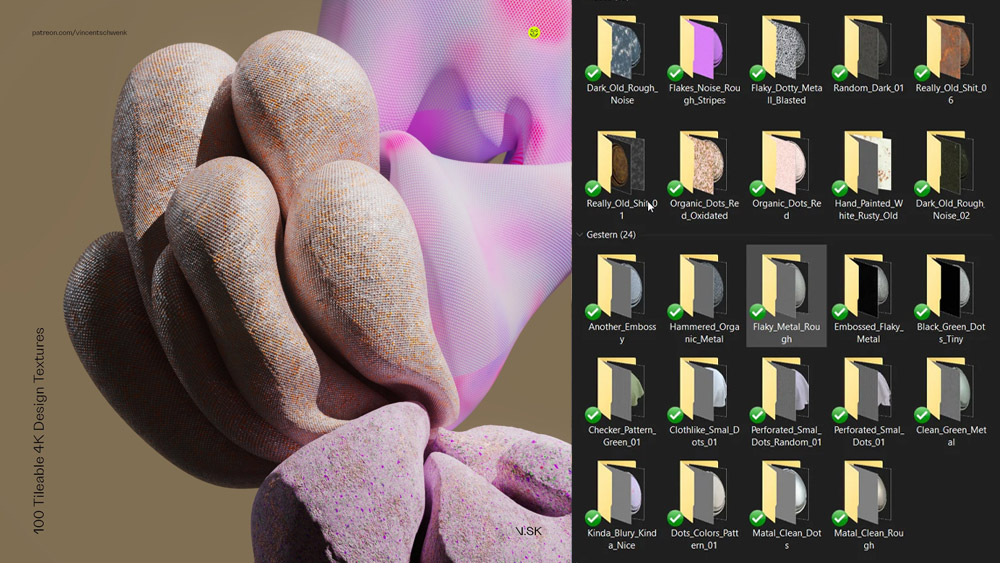

Frank Serafine: No. I don't think so. No. It's not that critical, especially for sound designers, and I've just got to stress this one: for you to find a Minimoog it's going to cost you $8,000, okay. That's a pretty big chunk of money. You can go out there and for $300 you can buy all these synthesizers. You can be a kid in your garage. You can make all the same sounds that I made on all those movies if you're creative and you start watching my tutorials that I'm going to be distributing through Digital-Tutors, which just got bought up by Pluralsight. We're going to have instructional videos on how to go in and create the sub-bass for the Klingon warships in "Star Trek".

Joey: Sold. That sounds awesome. Yeah.

Frank Serafine: We're going to show you step-by-step how I did it back then and then, I don't know how they're doing it on "Titanic", but I can show you how to do it for "Titanic".

Joey: Right.

Frank Serafine: I mean, not "Titanic" but "Martian". If you watched "Martian", it really emulates the spaceships that we did in the original "Star Trek" film.

Joey: I think it'll be interesting just to kind of hear maybe one sort of example walk-through or something like, what are all the steps involved in making one sound? If it's the sound of someone on a spaceship and they push a button and it turns on the computer and the computer is this super high-tech thing and lights turn on and there's graphics, how would you make that sound? How do you think about it? What tools would you use and how would it end up sounding the way it does in the film?

Frank Serafine: There's a lot of different elements that go especially when you're talking about beeps and telemetry. For instance, "The Hunt for Red October" which won the Oscar for all that stuff. Inspirationally, like right now, I'm going to show how to create those beeps and one of the best ways to create beeps that I love, that are unique and I do create many of them using a synthesizer, but I like to go out and record birds with a shotgun mic and then I bring those birds back. I chop them up in little pieces and you can't even tell that it's a bird, it sounds like a really super high-tech R2D2 beep.

Joey: You're trimming off the head and the tail of the sound and only keeping the middle?

Frank Serafine: Or keeping just the front so it has like a weird attack and then chopping the end off.

Joey: That's cool.

Frank Serafine: Then, just butting them all together so they go.
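The chop-and-butt idea Frank describes can be sketched in plain Python. Everything here is an assumption made for illustration: the "bird calls" are synthetic pitch glides standing in for real shotgun-mic recordings, the 60 ms slice length and 5 ms fade are arbitrary, and the function names are invented. It is not Frank's actual process, which happens by hand in an editor.

```python
import math

SAMPLE_RATE = 48_000  # assumed sample rate for the sketch


def fake_bird_call(duration_ms, start_hz, end_hz, sample_rate=SAMPLE_RATE):
    """Stand-in for a recorded bird call: a tone gliding between two pitches."""
    n = duration_ms * sample_rate // 1000
    samples, phase = [], 0.0
    for i in range(n):
        freq = start_hz + (end_hz - start_hz) * i / n
        phase += 2.0 * math.pi * freq / sample_rate
        samples.append(math.sin(phase))
    return samples


def keep_attack(samples, keep_ms, fade_ms=5, sample_rate=SAMPLE_RATE):
    """Keep only the front of a sound and chop the end off.

    A short linear fade-out is applied so the cut does not click.
    """
    keep = min(len(samples), keep_ms * sample_rate // 1000)
    fade = min(keep, fade_ms * sample_rate // 1000)
    out = list(samples[:keep])
    for i in range(fade):
        out[keep - fade + i] *= 1.0 - (i + 1) / fade
    return out


def butt_together(clips):
    """Place the clips end to end with no gap between them."""
    out = []
    for clip in clips:
        out.extend(clip)
    return out


calls = [
    fake_bird_call(500, 3000, 5000),
    fake_bird_call(500, 6000, 2500),
    fake_bird_call(500, 4000, 4500),
]
beeps = butt_together(keep_attack(call, 60) for call in calls)
```

With real recordings you would load the samples from audio files instead of generating them, and audition different slice lengths by ear until the source is no longer recognizable as a bird.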

Joey: Okay. Then, let's say that that's a good base but then, you're like, oh, I need a little more low-end, it needs to feel a little fuller. What might you do then?

Frank Serafine: For instance, if I'm creating the sub-element for a big giant spaceship pass-by, let's say, I will go with a Minimoog, I'll go to white noise or pink noise, which is deeper than white noise. Then, I'll go to the contour knobs and I'll bring down that rumble real nice and low, okay. Then, I'll just watch the picture and as the picture goes by, I'll bring in a little bit of modulation on the modulation wheel so it gives you this kind of like staticky rumble.

Joey: Got it. Even today, you're still sort of performing sounds, like even though it's all happening now on a computer. I'm sure you're recording through a computer using software emulators of these analog synthesizers.
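As a rough digital analogy to that patch, the sketch below filters noise down to a low rumble and then wobbles its level. It is not a model of the Minimoog: it uses white noise through a simple one-pole low-pass filter as a stand-in for closing the synth's filter, a sine LFO as a stand-in for the modulation wheel, and all names and values (80 Hz cutoff, 7 Hz wobble) are assumptions chosen for the example.

```python
import math
import random

SAMPLE_RATE = 48_000  # assumed sample rate for the sketch


def low_passed_noise(n, cutoff_hz, sample_rate=SAMPLE_RATE, seed=0):
    """White noise through a one-pole low-pass: lower cutoff, deeper rumble."""
    rng = random.Random(seed)
    alpha = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    y, out = 0.0, []
    for _ in range(n):
        x = rng.uniform(-1.0, 1.0)
        y += alpha * (x - y)  # move a fraction of the way toward the input
        out.append(y)
    return out


def add_modulation(samples, rate_hz, depth, sample_rate=SAMPLE_RATE):
    """Wobble the level with a sine LFO; depth 0 is off, depth 1 is full."""
    out = []
    for i, s in enumerate(samples):
        lfo = 0.5 * (1.0 + math.sin(2.0 * math.pi * rate_hz * i / sample_rate))
        out.append(s * (1.0 - depth * lfo))
    return out


def rms(samples):
    """Root-mean-square level, used here only to compare loudness."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


rumble = low_passed_noise(SAMPLE_RATE, cutoff_hz=80.0)
staticky_rumble = add_modulation(rumble, rate_hz=7.0, depth=0.6)
```

The filtered signal comes out much quieter than the raw noise, so in practice you would bring the gain back up afterwards. In a performance like the one Frank describes, the cutoff and modulation depth would be ridden by hand against the picture rather than fixed.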

Frank Serafine: Right.

Joey: You're still performing?

Frank Serafine: Right. Instead of doing it like I did it before which was take the Minimoog, perform it, dump it to a 24-track or Pro Tools or anything like that. Now, I'm using Logic X which is Apple's music software.

Joey: It's great, yup.

Frank Serafine: Then, I bring up my different synthesizers and samplers as plug-in instruments and then I just basically perform it, and all the automation, just like I did before, using an Arturia computer-controlled keyboard. I can turn the knobs and the automation is recorded right into Logic.

Everything that I do, now I'm back. It's like all of my old friends have reincarnated back into my computer and I can actually control all those knobs now right in front of me. They're all programmed for each one of those synthesizers. Arturia has gone through and mapped out the knobs for all those synthesizers. That's a beautiful thing because every synthesizer now, I can just pull one up and start turning knobs. I can either make my own template or you can just go with the templates that Arturia has provided for those particular synthesizers, which has really come a long way, and it's helping us be able to control those synthesizers like we used to.

Joey: How many layers, if you had a complicated sound effect like a spaceship flying by and I'm sure there's little twinkling lights on it and there's an asteroid in the background, how many layers of sounds are typically present in a shot like that?

Frank Serafine: It can be up to like 300.

Joey: Wow.

Frank Serafine: Or it could be 10.

Joey: There's no rule, it's just whatever you need.

Frank Serafine: That's like when you watch this new Ridley Scott film. Some of the scenes are big, giant, like where the ship flies by and you get to hear the big orchestra and everything. Then, as the movie starts getting a little more complicated and a little more deserted, let's say, he starts taking out all that music. It becomes simpler and it's just the ship rumbling by. You just get this feeling of loneliness. It's really great. That becomes the orchestral process and then that ship-by may only have one track. It'd just be a ship-by.

Joey: Got it. Yeah. I took one class at Boston University called sound design and it was really great. The biggest thing I took away was that a lot of sounds, say a foot crunching on some ice, it's actually six sounds being combined, and none of them are what they sound like when you put them together. It's someone crushing up some plastic or something and then you take that shattering glass sound and put them together with the footstep and it sounds like someone walking on stuff. I assume that at the level of sound design you're talking about, there'll be lots and lots of that, but you're saying that it's not always the case; sometimes one sound is enough.

Frank Serafine: Sometimes it will get pared down because on big movies we'll cover stuff, because you got to cover yourself when you get to the dub stage and the director goes, where are those twinkling lights? I know we're going to go a little bit sparse but I want to hear those twinkling lights. We will put all that in there and then, when you get to the dubbing stage, depending on story line and the way things are progressing and the score and all that stuff, stuff gets either added in, subtracted or blended together.

Joey: Got it.

Frank Serafine: You don't know. You have to be prepared to cover it all because you don't want to be at the mix in the Dolby Atmos theater at $1,000 an hour and find out you just need a couple of twinkling bells.

Joey: Right. Let's talk really quickly to anyone listening who is in the unenviable position of having to mix their own audio and not having a clue how to actually mix audio. I mean, audio is a very deep black hole you could fall into. What are some basic things for someone who has a music track, voice over and maybe some very simple sound effects? What are some things they can try, maybe eq'ing certain ways or compression or any plug-ins that can help do this? What are some things that a novice could start doing to make their sound better?

Frank Serafine: I guess I really have to get back to basics again because most musicians and probably 99% of the guys out there, including professional editors, sound designers, we're talking about the whole bundle, none of them tune their rooms. You know what that means?

Joey: I've heard of tuning a room but no, I don't know what it means.

Frank Serafine: Okay. If you don't know your output level like for instance, Dolby comes in to a theater and the reason why you have to pay for the Dolby certification when you do a movie is that they guarantee that when it comes out of the mixing stage that you're mixing in and it goes to the actual Atmos theater or Dolby surround theater or stereo theater, it goes out to the festivals or whatever it's going to do, that it's tuned properly so that when you listen back in the theater, it's playing back at 82dB just like it was in the mixing stage with the director and editor and mixers. It ensures that whatever the mixer is doing is interpreted in the theater exactly the same way as it was in the mixing stage.

Joey: Got it.

Frank Serafine: What I need to stress to everybody out there who wants to be able to get good sound, especially out of their own home environment, which is the way a lot of people are doing it these days: you need to tune your room. You need to go down to Radio Shack before they go bankrupt and pick up what's called a pink noise generator. What that does is it generates a pink noise so that when you stand at the sweet spot in your room, you listen back to your speakers at 82dB and you set your output levels for theater listening. Okay, so that when you're sitting at your computer, your output is exactly 82dB, so that when you start listening and eq'ing and doing all this processing, you're at the right level. Because if you're not at that correct level, it doesn't matter what you do, you're not going to get it right.
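The level-matching step comes down to simple decibel arithmetic, sketched here in Python. The 82 dB target is the figure Frank quotes; the meter reading of 76 dB is a made-up example, the function names are invented, and this covers only the playback level, not the frequency response of the room.

```python
TARGET_SPL_DB = 82.0  # listening level quoted in the interview


def trim_db(measured_spl_db, target_spl_db=TARGET_SPL_DB):
    """How many dB to raise (positive) or lower (negative) the monitor output."""
    return target_spl_db - measured_spl_db


def db_to_gain(db):
    """Convert a decibel change to a linear amplitude multiplier."""
    return 10.0 ** (db / 20.0)


if __name__ == "__main__":
    measured = 76.0  # hypothetical meter reading at the sweet spot
    change = trim_db(measured)
    print(f"Adjust monitor level by {change:+.1f} dB "
          f"(amplitude x{db_to_gain(change):.2f})")
```

In this hypothetical case the monitors are 6 dB too quiet, which is roughly a doubling of amplitude. You would play pink noise, read the meter at the listening position, adjust, and measure again until the reading sits at the target.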

Joey: Why 82dB?

Frank Serafine: Because that's how you listen back in the theater. There are different levels for broadcast television and there's a different one for web. If you're mixing for the theater, you have to follow the spec, the Dolby spec. You have to call them up. They come over to your studio, they tune your room for you and they go, okay, now you can mix. They stay there while you're mixing. It's their liability.

Joey: Yeah.

Frank Serafine: If you change a knob or a level or something and it turns out it screwed up the mix, then they're liable. Like I said, there's a lot of liability when it comes to this, and I've worked with a lot of directors that want to work out of their bedrooms and edit in Premiere. That's fine. Make sure that you have somebody come over and tune the room so that you're listening at 82, so that when you go over to your sound editor's or your editor's house, or you go to the director's house, everybody is listening back at the same decibel level if they add something or bring a level down or whatever. That's so critical, and nobody realizes how important it is.

Joey: Does tuning the room also involve the frequency response of the room? Some rooms are echoey and some rooms have carpet all over the place, so it's …

Frank Serafine: Yeah. It does. It sort of does. That's why you need to sit at the sweet spot, and generally if there's echoing you want to be closer to your speakers so that you don't have any … if there's a way that you can baffle your speakers, that's a good one. The main thing is that you sit at the sweet spot and that you're tuned properly to the Dolby specification, so that when you're mixing and collaborating with other editors and other mixing people and you're doing the work on your own computer, it translates properly to the other rooms that you're going to be mixing in, and finally to the dub stage.

Joey: What about a very common issue: you're given a voice over track that was recorded very well, and then you have a music track that sounds great, some really awesome piece of music, and then you put them together and all of a sudden you can't understand the voice over. It's muddy. How can you deal with that?

Frank Serafine: Got to lower the music.

Joey: That's it? It's just volume? There's no …

Frank Serafine: You can EQ the voice to cut through. The way that films are done, it always starts with the dialogue.

Joey: Yup.

Frank Serafine: Okay. In the mix, we start by editing the dialogue. We clean up the dialogue the best we can and then it goes to a premix, which is where the dialogue editor goes through all the split tracks and the fill tracks. Every time you split, like for instance if you're ADR'ing one character and the other is not, right, and you cut out a hole where one actor is going to be ADR'ed, well, you also take out all the ambiance. What you need to do is go in and do what they call fill, where you fill in all the ambiance. You need to go into the room tone somewhere; usually they shoot room tone before they actually shoot the scene, or after. Everybody shuts up on the set and they shoot room tone.

Joey: Yup.

Frank Serafine: If you need to, you grab a piece of that room tone, you go in and you cut it into the area of the slug of dialogue that you pulled out for that character, and it fills the room just like it sounds behind you. Then you can loop your actor over that and it sounds realistic.
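
The fill step Frank describes can be pictured as a simple sample-level splice. This is a minimal sketch under stated assumptions: audio is represented as plain lists of float samples, `fill_with_room_tone` is a hypothetical helper name, and the splice is a hard cut. A real dialogue edit would normally add short crossfades at both edges so the joins are inaudible.

```python
def fill_with_room_tone(dialogue, room_tone, start, end):
    """Replace dialogue[start:end] with looped room tone.

    `dialogue` and `room_tone` are lists of float samples. The room
    tone is repeated as often as needed to cover the removed slug,
    so the track length does not change.
    """
    if not room_tone:
        raise ValueError("room_tone must contain at least one sample")
    if not 0 <= start <= end <= len(dialogue):
        raise ValueError("start/end must lie inside the dialogue track")
    gap = end - start
    fill = [room_tone[i % len(room_tone)] for i in range(gap)]
    return dialogue[:start] + fill + dialogue[end:]


if __name__ == "__main__":
    # A tiny stand-in track: the five zeros are the hole left by the cut.
    track = [0.5] * 4 + [0.0] * 5 + [0.5] * 3
    tone = [0.01, -0.01]
    print(fill_with_room_tone(track, tone, 4, 9))
```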

Joey: Got it. Is there any processing that you like to do? Again, I'm trying to think of things that are going to be relatively simple for a novice audio person to figure out. Some processing like compression or EQ that you can do at the end just to give your audio a little bit more crispness, give it a little bit more of that polish that you hear when you have the money to go to someone who knows what they're doing.

Frank Serafine: There's a lot of tools. There's a company I work with called iZotope. There's all this sophisticated histogram and spectral waveform editing, which is basically Photoshop for audio. There's a really incredible scientific noise reduction system that I love using called SpectraLayers from Sony. Like I said, it's basically Photoshop for audio. You can go in if you have a mic bump, or for instance we can take police sirens out of the production now. It goes in and looks at the waveform in all the different colors and aspects of the audio, and you can actually go in and smudge out the police siren. We can take police sirens out of production dialogue. That was something we could never do before.

Joey: That's really cool. I overdo it but like I always put a little effect on my master track to just compress the audio and boost around 5K a little bit. That's just my little recipe that I like the way it sounds. Is there anything like that or does this make it … like even hearing me say that is that making you uncomfortable like, what's he doing?

Frank Serafine: I don't like compression to tell you the truth because compression is just a mechanical way to automate.

Joey: Yeah.

Frank Serafine: What I do is I ride the fader. If it needs to come up or down I do all that. I just do that in the editorial and automation process.

Joey: Yeah. Got you.

Frank Serafine: I follow the audio. I'm not going to give something an automatic compression. I just don't like the way that sounds.

Joey: Got you.

Frank Serafine: I never have. It's kind of weird. A lot of people are like, wow, you don't use compression? I'm like, no. I'm really old school when it comes to that. I just go in and map out my … I just do it with automation. Where it needs to compress, I bring it down on the actual fader.
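
Riding the fader by hand amounts to drawing a gain curve over time instead of letting a compressor decide. The sketch below shows one way such automation could be modeled; it is not taken from any particular DAW. The breakpoint format `(time_in_seconds, gain_in_dB)`, the linear interpolation in dB, and all function names are assumptions for illustration.

```python
def db_to_gain(db):
    """Convert a level change in decibels to a linear amplitude factor."""
    return 10 ** (db / 20)


def fader_db_at(t, breakpoints):
    """Fader position in dB at time t (seconds).

    `breakpoints` is a time-sorted list of (time, dB) pairs. Between
    two breakpoints the fader moves linearly in dB; before the first
    and after the last it holds its value.
    """
    if t <= breakpoints[0][0]:
        return breakpoints[0][1]
    for (t0, d0), (t1, d1) in zip(breakpoints, breakpoints[1:]):
        if t0 <= t <= t1:
            if t1 == t0:
                return d1
            frac = (t - t0) / (t1 - t0)
            return d0 + frac * (d1 - d0)
    return breakpoints[-1][1]


def ride_fader(samples, sample_rate, breakpoints):
    """Apply the automation curve to a list of float samples."""
    return [
        s * db_to_gain(fader_db_at(i / sample_rate, breakpoints))
        for i, s in enumerate(samples)
    ]


if __name__ == "__main__":
    # Pull the music down 6 dB over one second, e.g. under a voice over.
    moves = [(0.0, 0.0), (1.0, -6.0)]
    print(ride_fader([1.0, 1.0, 1.0], sample_rate=2, breakpoints=moves))
```

The same curve covers the simplest fix for a buried voice over, lowering the music, because a single constant breakpoint such as `[(0.0, -6.0)]` is just a static level change.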

Joey: Got it. All right. I have a couple more questions, Frank. You've been really generous with your time. One question I have is, if someone wanted to get started and start playing around with this stuff, what gear would you recommend? I'm talking about headphones, speakers, software. What do you need just to get started, not to rival Skywalker Sound or something like that?

Frank Serafine: It depends on what level. If you're an animator and you want to get into doing it on your own, you might do it all on your own at first, and then it might get a little more sophisticated. Once again, I'll have all the training videos on Pluralsight that will basically demonstrate everything from the prosumer all the way up to the total advanced professional user.

I would say that Audition from Adobe is a really good one for probably most animators, because it gives you the power of a lot of these iZotope plug-ins that you need to have when you're a professional. If you're the real super high end professional, yeah, you need to go out and get Pro Tools.

I actually work with Mytek, which is a really super highly advanced, super high quality audio interface that gives you all the professional outputs that you need. There are different levels. Like I said, if you're a prosumer you're going to stay in Premiere, you're going to send everything over with PluralEyes, you're going to work within Audition, and you're going to get it to where you love it in Audition. If your film gets picked up and you go mix it, the thing is that Audition doesn't really support the audio community like in Hollywood. It's definitely an incredible program, but you're not going to go to Universal Studios with an Audition file.

Joey: Got it.

Frank Serafine: What you're going to need to do, and I'll be showing how to do a lot of this stuff, is getting all of your materials [inaudible 01:29:11] it over to Pro Tools and get it all set up. There's a lot of workflow setup that you need to do before you actually get to the stage. You need to bus all of your dialogue tracks to one channel. Your effects, your foley, your backgrounds, your music all have to be bussed. If you're coming in, especially on these big films, you're going to have a hundred channels, maybe more, a couple hundred channels' worth of stuff, and they all have to get segmented into what are called stems, where you have your dialogue stem, your music stem, your effects stem. That's what you finally do your final pre-mastered mix from when you go to the Atmos theater. They all have to be organized in that fashion and they also have to be delivered that way, because not only is there the domestic release, which is in English, but then the movie goes to all the different countries like France and China and Japan. Then there's the foreign release, which is where you take the dialogue stem out and you just leave your music and effects in.

What you need to understand is that once the dialogue comes out, there's a lot of work when you go to do what's called foreign release. Because when you take the English dialogue out of the track, you also take out all the ambiance that was in that dialogue track, and all the foley and anything that may have been in that original production track comes out. You have to add all the cloth movement back in, all the footsteps have to go back in, and that's one of the primary reasons that foley evolved in films: when they went to foreign release and they took production out, they had to add all that background and all that foley back in.
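
The stem workflow can be sketched as grouping tracks by bus and summing them. This is only an illustration of the idea, not how Pro Tools routes audio: tracks are modeled as equal-length lists of float samples, and the track names, the stem names ("dialogue", "music", "effects"), and the helper functions are all assumptions made for the example.

```python
def mix(tracks):
    """Sum equal-length tracks sample by sample."""
    return [sum(column) for column in zip(*tracks)]


def build_stems(tracks, assignments):
    """Bus every track to its stem.

    `tracks` maps track name -> samples; `assignments` maps track
    name -> stem name. Returns stem name -> summed samples.
    """
    stems = {}
    for name, samples in tracks.items():
        stem = assignments[name]
        if stem in stems:
            stems[stem] = mix([stems[stem], samples])
        else:
            stems[stem] = list(samples)
    return stems


def domestic_mix(stems):
    """Full mix: every stem, dialogue included."""
    return mix(list(stems.values()))


def music_and_effects(stems):
    """Foreign-release mix: everything except the dialogue stem."""
    return mix([s for name, s in stems.items() if name != "dialogue"])


if __name__ == "__main__":
    tracks = {
        "boom": [0.5, 0.0],
        "lav": [0.25, 0.25],
        "score": [0.125, 0.125],
        "footsteps": [0.0, 0.5],
    }
    assignments = {
        "boom": "dialogue",
        "lav": "dialogue",
        "score": "music",
        "footsteps": "effects",
    }
    stems = build_stems(tracks, assignments)
    print(domestic_mix(stems))
    print(music_and_effects(stems))
```

Anything that only existed on the production dialogue tracks, such as footsteps or cloth movement picked up by the boom, disappears from `music_and_effects` along with the words, which is the gap the added foley has to fill.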

Joey: That's really interesting. It never actually occurred to me. That's pretty cool. What other types of things do you think are important for someone to have? Can you just use the speakers on your iMac to do this stuff? You need something a little more professional than that?

Frank Serafine: That's a really good question because the problem with listening to headphones or speakers on your iMac is that there's a compression algorithm that Apple does on that mini plug out of your computer and it compresses the audio.

If you're sitting there, and this is really important for people to know, do not ever mix on your laptop or your computer through the speakers or headphones, because when you get to an audio interface and you listen, it is going to sound so bad you're going to be pissed, and you're going to love that I taught you about this. Because they compress the audio and it sounds wonderful when you're listening to it in your headphones, but they're compressing everything.

Joey: Right.

Frank Serafine: You're pushing your dialogue up wrong, you're pushing everything up to where it sounds good in compression but when you finally listen through the interface it sounds like Frankenstein.

Joey: What's a dollar amount for getting a decent pair of speakers? And it sounds like you need some kind of USB interface to get the audio out uncompressed.

Frank Serafine: There's cheap ways of doing it. You can do it for really nothing. Zoom makes little consoles and interfaces. For $100 or $200 you just come out with your USB into these little boxes and then just plug your speakers and headphones into it.

Joey: What's a good price point for a decent set of speakers?

Frank Serafine: The speakers, I would say you've got to probably … you're going to want something good. I work with a couple of different companies but I like ESI. They're really nice sounding speakers, they're small, they're nice just for desktop and they're very, very good sounding. There's a lot of different speakers. Most of the music speakers out there that you get are probably going to be fine. Speaker technology's come a long way.

That's not so much to worry about. It's more that it needs to come out of the interface properly.

Joey: Got it. That's huge. I actually didn't realize that at all. I use a little two-channel Focusrite interface and I think it might have been 150 bucks. It's not very expensive. As for the speakers, I'm looking at those ESIs you just mentioned, and I'm assuming you're talking about powered speakers.

Frank Serafine: Yeah, I'm for the powered speakers because you just put them on your desk and you're done.

Joey: Yeah. You don't need an amplifier or anything. Okay perfect.

Frank Serafine: Those little speakers are fine. Like I said, getting back to it, go out and pick yourself up one of those pink noise generators, then run that pink noise through an input channel of your console and set it at zero, and then you bring up the output, which is your main output, and any bus that you might have. You set all those at zero, then you sit exactly where you're going to be mixing and you run the pink noise generator at that 82dB. What you do is you raise the level of your speakers, not your console. Your console is sitting at zero, and you're running the pink noise into the room. It's being picked up by a microphone and then it's going back through your speakers. Then what you do is you adjust the level of the speaker to get it right to 82dB, and that's how you tune your room.

Joey: How do you know that you're at 82dB? Do you need some device that measures loudness?

Frank Serafine: Yeah, the pink noise generator is going to … no, yes. I'm sorry. You need to have a dB reader.

Joey: dB reader, okay.

Frank Serafine: Not only do you need the pink noise generator but you also need the decibel reader.
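
The arithmetic behind the level check is small enough to write down. In this sketch the 82 dB target is the figure quoted in the conversation; the 0.5 dB tolerance and the function names are assumptions for illustration, and the measured value is whatever the decibel reader shows at the mix position while pink noise plays with the console at zero.

```python
REFERENCE_SPL_DB = 82.0  # target level quoted in the interview


def speaker_trim_db(measured_spl_db, target_spl_db=REFERENCE_SPL_DB):
    """How many dB to turn the speakers up (+) or down (-).

    Only the speaker level is changed; the console stays at zero.
    """
    return target_spl_db - measured_spl_db


def is_calibrated(measured_spl_db, target_spl_db=REFERENCE_SPL_DB,
                  tolerance_db=0.5):
    """True when the reading is within the (assumed) tolerance."""
    return abs(target_spl_db - measured_spl_db) <= tolerance_db


if __name__ == "__main__":
    reading = 76.5  # example meter reading at the sweet spot
    print(f"Adjust speakers by {speaker_trim_db(reading):+.1f} dB")
    print("Calibrated:", is_calibrated(reading))
```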

Joey: That's interesting. I'm assuming both of those are fairly inexpensive, right?

Frank Serafine: Yeah. I mean I think it's like 30, 40 bucks for the dB readers, pink noise generator probably the same online. I have these things, I've had them for 30 years because I understand how important it is and most people don't even understand what that means.

Joey: For 500 bucks you can actually put together a way to tune the room, get your audio out clean and have some decent speakers. That's pretty awesome.