If you want to improve your mograph work, you need to think like a cinematographer

Hey there, motion designer! I bet that before you decided you could professionally operate 3D software, it was imperative to learn how to operate the camera as if you were an ASC-certified cinematographer, right?

Are those... crickets I hear?

You could call cinematography a “lost art” in mograph, but when was it ever considered an essential part of the motion design process in the first place? True, motion designers love to choreograph things (cameras most definitely included), but the meaning behind camera movements, lens choices, and lighting is often left behind.

Contrary to what you might think, it’s not really big sweeping camera moves that differentiate the best directors of photography (in this article, by the way, you’ll hear me use Director of Photography, DP, and cinematographer interchangeably). Study some of the best work and you’ll notice that—in addition to creating an aesthetic—the best DPs create a point of view and an emotion.

They do this not by showing off the scenery, but rather by choosing what to reveal—and maybe more importantly...what to conceal.

Let’s face it. For motion designers, the focus (pun intended) tends to favor showing off the graphics, so that they appear (and remain) aesthetically pleasing. Isn’t a consistently pretty image enough?

Thinking like a cinematographer lets you tell stories with drama and emotional impact rather than merely admiring the scenery. If you already know how to operate a 3D camera, you can learn to create cinema magic by studying how the most talented professional cinematographers plan and execute shots. This article primes you on a few basics to look for in your favorite films.

- How Cameras Behave in the Real World

- The Characteristics of Lenses

- The Implications of Shot Styles

- What Different Movements Mean to the Story...and the Audience

- Get the Camera Off the Sticks


How Real Cameras Behave

One way to start thinking this way is to constrain your camera to only what is possible in the physical world. You may find that this alone forces you to be more creative and expressive with your virtual camera work. After all, it was Orson Welles, the great innovator of golden-age Hollywood cinematography (along with Citizen Kane DP Gregg Toland), who famously said that “The absence of limitations is the enemy of art.”

So what do you suppose might happen if you were to consider constraining more of what you do with your virtual camera to only what can be done with a real one? Would this automatically make you more creative with your camera work? Would the result have greater emotional impact than it would otherwise?

To help you experiment with this concept, I present five essential choices that every DP makes for every shot. These are so fundamental as to be universal, and yet I’m willing to bet they will seem utterly foreign to many motion designers. Let’s go through them one by one.

Choose the appropriate focal length for the shot

What is the purpose of so many lenses?

Cinematographers certainly know their cameras, but believe it or not, the camera itself doesn’t get the most attention. Light and lenses matter much more because of how they are uniquely chosen for each shot. Listen in while a DP discusses lens choice with the first AC (the assistant camera operator who deals with lenses and focus). The conversation is reminiscent of, say, a pro golfer calling for a specific club from the caddy. Why?

Work with an actual professional camera (one with interchangeable lenses, not a fixed lens) and you will quickly discover that each lens has a unique character that shapes and transforms the shot. Pinning this flavor down precisely can be ineffable, like discerning some mystery spice.

Broadly speaking, however, lenses come in one of two types: prime (fixed length) or zoom (variable length). Both types of lens vary by focal length, and they are considered wide, standard, or telephoto (long).

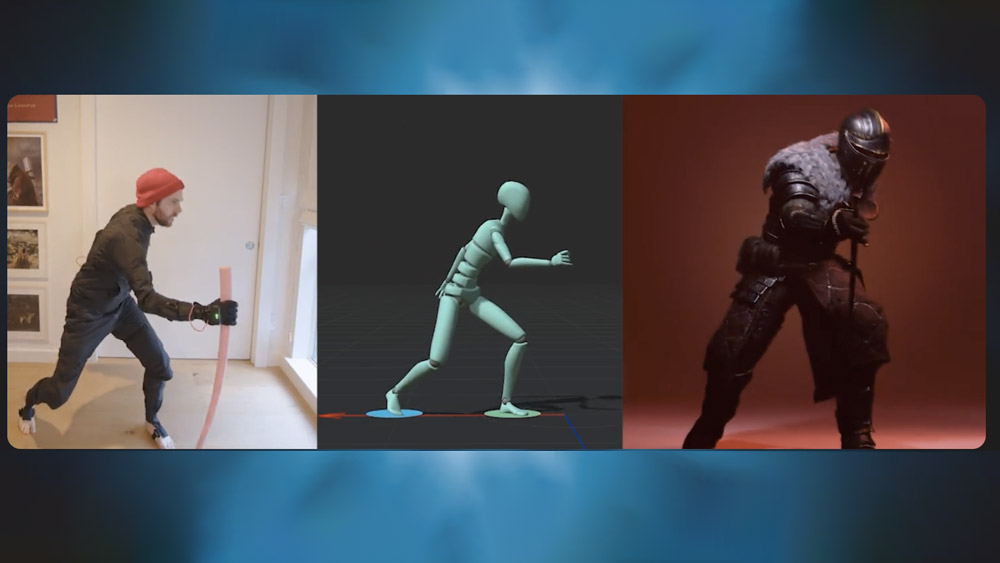

And here is how the cameras were positioned to capture the above shots. A real camera fitted with lenses of these angles would produce essentially the same result.

Among the wide lenses are the extremely wide fisheye lenses, usually used for specialty photography. The longer telephoto lenses include the massive models used to shoot sporting events, celebrities, and other wildlife. A more modest-length telephoto is typically considered ideal for portraits, while wide angles are most often used for environments (interiors or landscapes). A standard lens is, well, roughly akin to what you’d see with the naked eye, sort of. More about that in a moment.

Now we come to a somewhat confusing point. Virtual lens angles (from your 3D camera in C4D, Houdini, or After Effects) have no direct influence on the character of a shot. Rather, it is the position of the camera that changes the perspective. If you frame a wide shot and a long shot of a scene with 3D depth identically, they will look different, but that’s because the camera with the narrow (long) lens angle has to be much farther away to frame the subject the same way as the wide (short) lensed camera.

In the above example by photographer Dan Vojtěch, the character of the face changes dramatically with lens length—but what you don’t see is how dramatically the distance of the camera also varies to frame the shot identically.
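To put numbers on that distance, here is a minimal sketch that treats the camera as an ideal pinhole, which is how a virtual camera behaves. It assumes a full-frame 36 x 24 mm film back and a hypothetical close-up in which 0.3 m of the subject fills the frame vertically; the function names are illustrative, not taken from any 3D package.

```python
import math

SENSOR_HEIGHT_MM = 24.0  # assumption: full-frame (36 x 24 mm) film back


def vertical_aov_deg(focal_mm: float, sensor_h_mm: float = SENSOR_HEIGHT_MM) -> float:
    """Vertical angle of view of an ideal (pinhole) camera."""
    return math.degrees(2 * math.atan(sensor_h_mm / (2 * focal_mm)))


def framing_distance_m(focal_mm: float, frame_height_m: float,
                       sensor_h_mm: float = SENSOR_HEIGHT_MM) -> float:
    """Camera-to-subject distance at which frame_height_m exactly fills the frame vertically."""
    half_angle = math.radians(vertical_aov_deg(focal_mm, sensor_h_mm)) / 2
    return (frame_height_m / 2) / math.tan(half_angle)


if __name__ == "__main__":
    # Hypothetical close-up: 0.3 m of the subject fills the frame top to bottom.
    for f in (20, 50, 85, 200):
        print(f"{f:>4} mm  AoV {vertical_aov_deg(f):5.1f} deg  "
              f"distance {framing_distance_m(f, 0.3):.2f} m")
```

Under these assumptions the 20mm camera sits 0.25 m from the subject and the 200mm camera sits 2.5 m away: ten times the focal length means ten times the distance for the same framing, and that change in distance is what reshapes the face.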

Learn the character of the lens, and use its strengths and limitations to advantage

Why do real lenses change the character of the shot, but virtual ones don’t?

This is a trick question. The reason that lens distortion occurs with a physical lens but not a virtual one is that a virtual camera has no lens.

One of the reasons a camera needs a lens is that it must bend light to widen or narrow the camera’s view. A single camera lens actually contains multiple lens elements (individual curved pieces of glass) that work together to resolve the image correctly.

With a virtual camera, “light” (the scene image) travels in a completely straight line directly to the plane where the image is gathered. Essentially, the scene is simply recorded at a given width, kind of like if you placed a frame in front of your eyes.

Everyone knows that if you can’t see the camera lens (or eye), it can’t see you. If you’re standing alongside the camera, only a bulging fisheye lens will include you in the image.

For a lens manufacturer, the goal is generally to distort the image as little as possible while providing this wider view. But as the view is widened further, extreme fisheye lenses distort more and more toward the edges of the frame.
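The difference between a virtual camera and a fisheye can be sketched with two projection formulas. A pinhole (rectilinear) camera places a ray arriving θ degrees off-axis at a distance f·tan(θ) from the frame center, while an idealized equidistant fisheye places it at f·θ. Real fisheye lenses follow a variety of mappings, so treat the equidistant model here as an assumption chosen for simplicity.

```python
import math


def rectilinear_radius(theta_deg: float, focal: float = 1.0) -> float:
    """Distance from frame center for a ray theta degrees off-axis (pinhole/virtual camera)."""
    return focal * math.tan(math.radians(theta_deg))


def equidistant_fisheye_radius(theta_deg: float, focal: float = 1.0) -> float:
    """Same ray under an idealized equidistant fisheye mapping, r = f * theta."""
    return focal * math.radians(theta_deg)


def rectilinear_radial_stretch(theta_deg: float) -> float:
    """Radial stretch of a small angular step, relative to the frame center (sec^2 theta)."""
    return 1.0 / math.cos(math.radians(theta_deg)) ** 2


if __name__ == "__main__":
    for theta in (0, 15, 30, 45, 60, 75, 85):
        print(f"{theta:>2} deg  rectilinear {rectilinear_radius(theta):6.2f}  "
              f"fisheye {equidistant_fisheye_radius(theta):4.2f}  "
              f"stretch x{rectilinear_radial_stretch(theta):5.1f}")
```

The rectilinear radius heads toward infinity as θ approaches 90 degrees, which is why an undistorted image can never show what is beside the camera. At 60 degrees off-axis it already stretches detail radially by a factor of 4 compared to the frame center; the fisheye instead compresses the edges, bending straight lines to fit a wider view.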

In After Effects, we can remove distortion from camera images, or recreate it, with effects plug-ins (the built-in Optics Compensation effect, or perhaps the Lens Distortion effect from Red Giant). How and why you would do this is beyond the scope of this article, but the Red Giant approach is designed to make removing and restoring lens effects less complicated than it is with Optics Compensation.

Study the implications of a long, wide, or neutral shot

What does the camera lens do to the perspective of the shot?

Here is where things can get a little confusing. Again we are presented with somewhat of a trick question. Camera position is what truly changes the perspective of the shot. A different lens angle changes the framing of the shot. Two shots taken with identical framing but different positions must have—you guessed it—different angles of view.

Additionally, as we’ve just seen in the previous section, the lens that would deliver a specific angle of view has specific characteristics that change the shot in other ways, like adding lens distortion.

Side note: You will see the terms field of view, angle of view, focal length, and zoom amount used interchangeably. They all affect the same thing—how long or wide the shot is. With computers, angle of view (AoV) is the most consistently accurate since it’s not dependent on the size of the frame or length of the (imaginary) lens. You can specify a horizontal or vertical (or even diagonal) AoV, so make sure you know what you’re dealing with.
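Because the same focal length yields a different angle depending on which film-back dimension you measure, a quick conversion helps when moving a lens setting between apps. This sketch assumes an ideal rectilinear lens and a 36 x 24 mm full-frame film back; the function names are hypothetical, and you should check which dimension (horizontal, vertical, or diagonal) your own software actually reports.

```python
import math


def aov_deg(focal_mm: float, film_dim_mm: float) -> float:
    """Angle of view across one film-back dimension for an ideal rectilinear lens."""
    return math.degrees(2 * math.atan(film_dim_mm / (2 * focal_mm)))


def focal_from_aov(aov: float, film_dim_mm: float) -> float:
    """Inverse: the focal length that produces `aov` degrees across `film_dim_mm`."""
    return film_dim_mm / (2 * math.tan(math.radians(aov) / 2))


def horizontal_to_vertical(h_aov: float, aspect: float) -> float:
    """Convert a horizontal AoV to a vertical one for a frame aspect ratio (width / height)."""
    return math.degrees(2 * math.atan(math.tan(math.radians(h_aov) / 2) / aspect))


if __name__ == "__main__":
    w, h = 36.0, 24.0  # assumption: full-frame 35mm still film back
    diag = math.hypot(w, h)
    for f in (24, 50, 85):
        print(f"{f} mm: H {aov_deg(f, w):.1f}  V {aov_deg(f, h):.1f}  "
              f"D {aov_deg(f, diag):.1f} deg")
```

With these assumptions a 50mm lens covers roughly 40 degrees horizontally, 27 degrees vertically, and 47 degrees diagonally. That spread is exactly why "angle of view" alone is ambiguous until you know which dimension it describes.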

Going back to our cinematographers and photographers, who always seem to love collecting lenses (aka “glass”): they choose not only a certain focal length but a certain lens for its character. For example, a portrait photographer might choose to work with a 50-70mm lens (on a 35mm camera, this ranges from standard to slightly telephoto) because of how flattering it is to facial features.

But a filmmaker who wants a stylized look for his characters might choose to break this rule. For example, Jean-Pierre Jeunet, director of Amélie (one of the earliest examples of surreal mograph-like story-driven effects in a narrative feature film), has made doing so something of a signature look.

Jeunet’s films are what you could call “very French”: stylized, with characters awkwardly thrust into absurd circumstances. Amélie is a beautiful film that, rather than flattering its characters with a typical slightly long close-up lens, is shot almost entirely with very wide lenses.

Framing a close-up with such a wide lens requires the camera to be almost right up against the talent. The result is slightly claustrophobic, and it also distorts the characters’ faces into live-action caricatures: prominent Gallic noses become even more so, and wide eyes become wider.
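Why does moving in so close exaggerate a nose? Under a pinhole model, on-screen size is inversely proportional to distance, so features at different depths are magnified by different amounts. This sketch assumes a hypothetical 10 cm of depth between the tip of the nose and the ears; the numbers are illustrative, not measurements from the film.

```python
def relative_magnification(camera_to_nose_m: float, nose_to_ear_depth_m: float = 0.10) -> float:
    """How much larger the nose plane is rendered than the ear plane.

    Under a pinhole model, image size scales with 1 / depth, so the ratio of
    magnifications is (distance to the ears) / (distance to the nose).
    """
    camera_to_ear_m = camera_to_nose_m + nose_to_ear_depth_m
    return camera_to_ear_m / camera_to_nose_m


if __name__ == "__main__":
    # Hypothetical distances for a wide-lens close-up versus a long-lens close-up.
    for d in (0.25, 0.6, 1.0, 2.5):
        print(f"camera {d:.2f} m from nose: nose drawn x{relative_magnification(d):.2f} vs ears")
```

At 0.25 m the nose plane is drawn 1.4 times as large as the ear plane; at 2.5 m the difference shrinks to 1.04. The lens itself does nothing here; it simply forces the camera to be that close.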

The point isn’t to recommend shooting more films this way. Rather, it’s that the filmmaker widened his expressive palette for this masterful film by breaking convention and knowing what the lens can do. In the same film, the color grade is a (rare) red/green, generally the least popular and least flattering color scheme when it’s not Christmastime. Once again, the choice wasn’t made just to be different; it suits the cheery tone of the movie.

Would you ever go so far as to add lens distortion to a purely computer-generated image? It’s not necessarily that you should, but that you can, once you understand what it does for the look and feel. Without the curvature, a wide-angle shot becomes less wide. In an FPS game, you may sometimes notice extreme linear stretching of the wide image in the corners; this is too ugly for most films to allow, but it works for the medium.

First-person action games (like Valorant, shown here) often use a view as wide as a GoPro’s, but without the distortion. This can feel too angular and stilted for cinema.

The classic western shootout is captured with a long lens, a look popularized in the 1960s by Sergio Leone.

Filmmaker Jean-Pierre Jeunet makes unconventional use of the wide-angle lens for character close-ups. It’s a flattering enough look on Audrey Tautou and adds a certain caricature to some of the other, more Gallic-looking figures.

To reframe in 3D for drama, dolly always, zoom (almost) never

Why dolly the camera instead of zooming in or out?

This is a very common point of confusion, and one of those things you can’t help but notice once you’ve seen it. Zooming the camera is no different from cropping the image.

It’s really that simple. Zooming only changes the framing of the shot. There is no change in perspective or relative scale.
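A pinhole projection makes the difference concrete. This sketch places two identical objects 2 and 10 units in front of the camera (hypothetical values) and compares their on-screen size ratio after a 2x zoom and after a 1-unit dolly move; the helper names are illustrative.

```python
def projected_height(focal: float, object_height: float, depth: float) -> float:
    """Pinhole projection: on-screen size is proportional to focal length / depth."""
    return focal * object_height / depth


def near_far_ratio(focal: float, camera_z: float, near_z: float = 2.0, far_z: float = 10.0) -> float:
    """On-screen size of a near object divided by that of an identical far object."""
    near = projected_height(focal, 1.0, near_z - camera_z)
    far = projected_height(focal, 1.0, far_z - camera_z)
    return near / far


if __name__ == "__main__":
    base = near_far_ratio(focal=35, camera_z=0.0)
    zoomed = near_far_ratio(focal=70, camera_z=0.0)   # zoom in 2x, camera stays put
    dollied = near_far_ratio(focal=35, camera_z=1.0)  # camera moves 1 unit closer
    print(f"start {base:.2f}  zoom {zoomed:.2f}  dolly {dollied:.2f}")
```

The near object starts at 5 times the far object’s on-screen size. Doubling the focal length enlarges both equally, so the ratio stays at 5; moving the camera just one unit closer pushes it to 9, because the near object’s distance halves while the far object’s barely changes.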

There are a couple of things you should know about zooms from a cinematic perspective. One is that for feature film (i.e., expensive-looking) images, they have been out of fashion since at least the 1970s, except when directors want to emulate that style to make a shot stand out (hello, Quentin Tarantino).

Zooming in (and to a lesser extent, out), including the much-beloved crash zoom “surprise!” shot, is a staple of mockumentary projects from Arrested Development to The Office. What you’ll notice about the zoom shot is that it calls attention to the camera itself and its operator.

In many professional applications, including live-action sports, the zoom is considered amateurish for at least a couple of reasons. One is probably that this shot is a staple of camcorder home movies. Additionally, the zoom implies that the camera operator was caught by surprise, unprepared.

The human eye does not zoom. Our eyes show us depth of field, motion blur, perspective—many of the things we associate with the camera—but our eyes contain what you would consider prime, not zoom lenses. You want a closer look? Move in. We’ll get to that in a moment.

However, in a 2D environment there is no difference between a dolly move (moving the camera closer to or farther from the subject, with or without an actual dolly, the wheeled conveyance for the camera) and a zoom. Moving in or zooming on a 2D image is no different from reframing (effectively cropping) that image. It’s only when you shoot in a 3D environment that the distinction becomes meaningful.
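The 2D case falls out of the same pinhole math. When every point sits at one depth, as on a flat card or background plate, moving the camera closer scales the whole image uniformly, and a zoom can match it exactly. This sketch uses hypothetical card positions and an arbitrary starting focal length of 35.

```python
def project_x(focal: float, x: float, depth: float) -> float:
    """Horizontal screen position of a point under a pinhole camera."""
    return focal * x / depth


def dolly_vs_zoom_on_card(points_x, card_depth: float, dolly: float, focal: float = 35.0):
    """Compare dollying toward a flat card with the equivalent zoom.

    Every point shares one depth, so moving in by `dolly` scales the whole image
    by card_depth / (card_depth - dolly), which is exactly what a zoom does.
    """
    dollied = [project_x(focal, x, card_depth - dolly) for x in points_x]
    equivalent_focal = focal * card_depth / (card_depth - dolly)
    zoomed = [project_x(equivalent_focal, x, card_depth) for x in points_x]
    return dollied, zoomed


if __name__ == "__main__":
    xs = [-2.0, -0.5, 0.0, 1.0, 3.0]  # hypothetical points on a flat background plate
    dollied, zoomed = dolly_vs_zoom_on_card(xs, card_depth=10.0, dolly=4.0)
    print(all(abs(a - b) < 1e-9 for a, b in zip(dollied, zoomed)))
```

The two lists match point for point. Give the points different depths and no single zoom can reproduce the dolly, which is the 3D distinction described above.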

Get the camera off the sticks, and rarely pan

Why truck the camera instead of panning it?

And that brings us to the final point. It’s easier in the real world to park the camera on a tripod and pan (and zoom) around instead of moving it, and it’s similarly easier in the virtual world to effectively do the same thing, rotating the virtual camera instead of repositioning it. But in many cases it’s not what you want.

Novices to cinema sometimes say “pan” to mean any camera move. This may be because the common cinema terms—dolly for a move in/out, truck for a move left/right, pedestal for a move up/down—are based on the equipment used to create them on a film set.

Panning shots are most often slow establishing or transitional shots. Quick pans during a dramatic scene are in the same category as crash zooms—great mostly for comedic effect, for making us aware there is a camera operator trying to keep up with the spontaneous action.

Actual camera moves, meanwhile, are the very essence of cinema, be they handheld and chaotic-feeling or smooth as Steadicam silk. Not only that, but only by moving the camera through a dimensional environment do you give camera tracking software the parallax it needs to do its job.

A nodal pan, in which the camera stays in place (on a tripod, for a real-world shot), produces no change in perspective. 3D tracking software can track the rotation of the camera itself, but cannot provide any dimensional information about the scene until the camera moves.
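Here is a minimal top-down sketch of why a nodal pan gives a tracker nothing to work with. Two hypothetical points sit on the same line of sight, one near and one far; rotating the camera in place keeps them overlapped on screen, while trucking it sideways separates them. The flattened (x, z) setup and the function names are simplifications for illustration.

```python
import math


def screen_x(point, cam_x: float = 0.0, yaw_deg: float = 0.0, focal: float = 1.0) -> float:
    """Project a top-down world point (x, z) into a pinhole camera at (cam_x, 0) turned by yaw_deg."""
    a = math.radians(yaw_deg)
    dx, dz = point[0] - cam_x, point[1]
    # Express the offset in the camera's own right/forward axes after the yaw.
    x_c = dx * math.cos(a) - dz * math.sin(a)
    z_c = dx * math.sin(a) + dz * math.cos(a)
    return focal * x_c / z_c


def parallax(near, far, **camera) -> float:
    """Screen-space separation between two points; 0 means they overlap exactly."""
    return abs(screen_x(near, **camera) - screen_x(far, **camera))


if __name__ == "__main__":
    near, far = (0.0, 2.0), (0.0, 10.0)  # hypothetical: both on the camera's initial line of sight
    print("nodal pan 10 deg:", round(parallax(near, far, yaw_deg=10.0), 6))
    print("truck 0.5 units: ", round(parallax(near, far, cam_x=0.5), 6))
```

The pan reports zero separation no matter how far you rotate, while the half-unit truck separates the points by 0.2 in these units. That shift between near and far points is the depth cue a 3D tracker solves from.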

Moving the camera adds dimension. But to do so, you must work with a dimensional environment. Here’s an example from VFX for Motion in which students tried to recreate a handheld camera move against a 2D scene. The problem is that the background is painted with dimensional perspective, but it is all just the flat perspective of a painting, so moving the camera reveals no parallax.

Now you know it, so try it!

There are a couple of takeaways from this article I would love for you to put into play. One is simply to give the camera a creature’s point of view. This could be a camera operator, witness, stalker, pet, handheld witness that almost missed the shot, baby, or auteur paying homage to a famous shot from a movie: whatever feels appropriate.

The other is that camera and lighting choices that obscure areas of the scene are almost always more cinematic than those that are afraid to do so. We didn’t really get into lighting or lens effects like shallow depth of field here, but you will notice them come into play as you watch well-shot films.

All of which you are encouraged to do!

(Need a refresher on camera moves and what they do? We've got you covered!)

VFX for Motion

If all this talk of virtual cameras and lenses got you inspired, maybe you're ready for an advanced technique training session. VFX for Motion will teach you the art and science of compositing as it applies to Motion Design. Prepare to add keying, roto, tracking, matchmoving and more to your creative toolkit.


Acidbite ➔

50% off everything

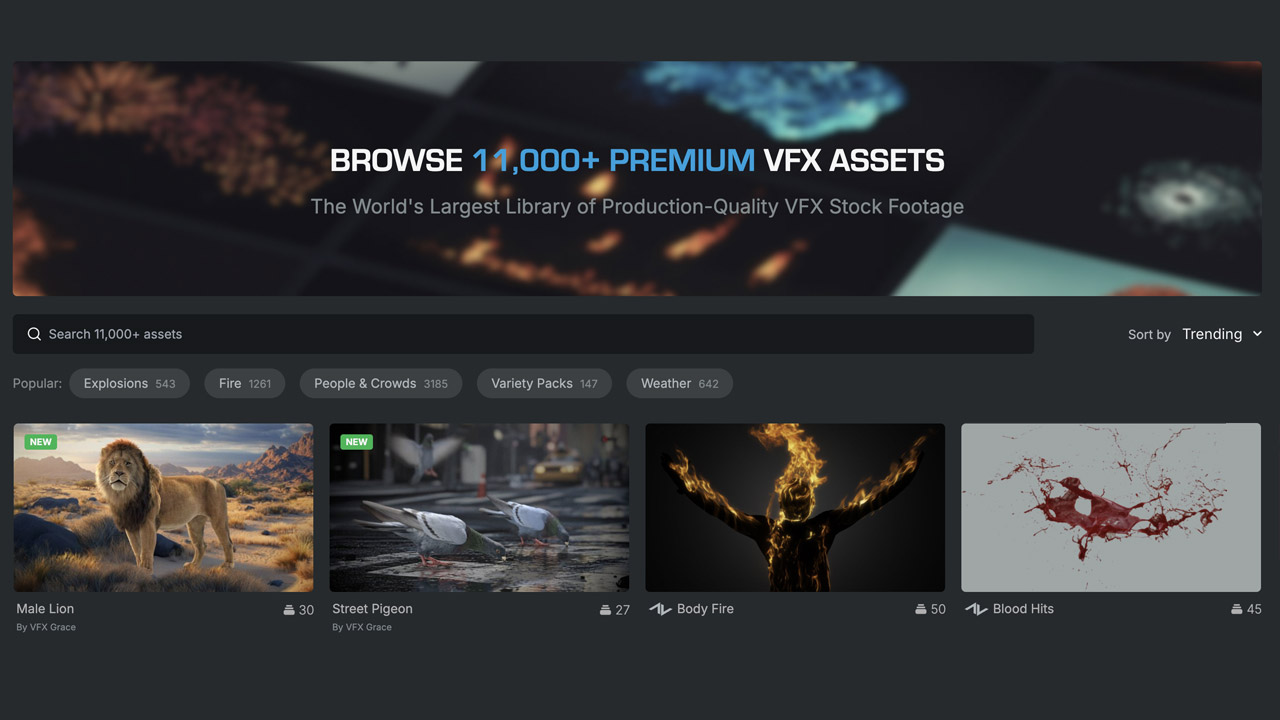

ActionVFX ➔

30% off all plans and credit packs - starts 11/26

Adobe ➔

50% off all apps and plans through 11/29

aescripts ➔

25% off everything through 12/6

Affinity ➔

50% off all products

Battleaxe ➔

30% off from 11/29-12/7

Boom Library ➔

30% off Boom One, their 48,000+ file audio library

BorisFX ➔

25% off everything, 11/25-12/1

Cavalry ➔

33% off pro subscriptions (11/29 - 12/4)

FXFactory ➔

25% off with code BLACKFRIDAY until 12/3

Goodboyninja ➔

20% off everything

Happy Editing ➔

50% off with code BLACKFRIDAY

Huion ➔

Up to 50% off affordable, high-quality pen display tablets

Insydium ➔

50% off through 12/4

JangaFX ➔

30% off an indie annual license

Kitbash 3D ➔

$200 off Cargo Pro, their entire library

Knights of the Editing Table ➔

Up to 20% off Premiere Pro Extensions

Maxon ➔

25% off Maxon One, ZBrush, & Redshift - Annual Subscriptions (11/29 - 12/8)

Mode Designs ➔

Deals on premium keyboards and accessories

Motion Array ➔

10% off the Everything plan

Motion Hatch ➔

Perfect Your Pricing Toolkit - 50% off (11/29 - 12/2)

MotionVFX ➔

30% off Design/CineStudio, and PPro Resolve packs with code: BW30

Rocket Lasso ➔

50% off all plug-ins (11/29 - 12/2)

Rokoko ➔

45% off the indie creator bundle with code: RKK_SchoolOfMotion (revenue must be under $100K a year)

Shapefest ➔

80% off a Shapefest Pro annual subscription for life (11/29 - 12/2)

The Pixel Lab ➔

30% off everything

Toolfarm ➔

Various plugins and tools on sale

True Grit Texture ➔

50-70% off (starts Wednesday, runs for about a week)

Vincent Schwenk ➔

50% discount with code RENDERSALE

Wacom ➔

Up to $120 off new tablets + deals on refurbished items